In recent years, and especially since Google's AlphaGo defeated the South Korean Go player Lee Sedol, machine learning, and deep learning in particular, has swept the entire IT industry. Internet companies, especially giants such as Google, Microsoft, Baidu, and Tencent, are all positioning themselves in artificial intelligence technologies and markets. Baidu, Tencent, Alibaba, JD.com (Jingdong), and other Internet giants have even gone to Silicon Valley to recruit AI talent at high salaries. In Beijing today, anyone working on machine learning algorithms reportedly earns at least 20k a month, and some earn more than 100k...

A new era has indeed arrived. We moved from the Internet to the mobile Internet, and now we are moving from the mobile Internet to the era of artificial intelligence. Some in the industry claim that this AI boom marks the start of the Internet 3.0 era. IT engineers who do not understand machine learning therefore risk being seen as low-level programmers. So start from the basics, get started with machine learning, and step through the door of artificial intelligence...

1. The three rises and falls of artificial intelligence

From the 1950s to the 1970s, after the concept of artificial intelligence was proposed, researchers tried to simulate human intelligence. Interest gradually cooled, however, because the algorithms were too simple, there was no theory for coping with uncertain environments, and computing power was limited.

In the 1980s, the expert system, a key application of artificial intelligence, was developed. But with little data available, it was hard to capture the tacit knowledge of experts, and the complexity and cost of building and maintaining large systems gradually cost artificial intelligence the attention of mainstream computer science.

In the 1990s, technologies such as neural networks and genetic algorithms "evolved" good solutions to many problems. In the first decade of the 21st century, the factors that revived artificial intelligence research, such as Moore's Law, big data, cloud computing, and new algorithms, pushed artificial intelligence into a period of rapid growth. Over the next decade, the field is expected to work through some hard-to-overcome difficulties and usher in the explosive growth of the singularity era.

2. Why the new wave is rising

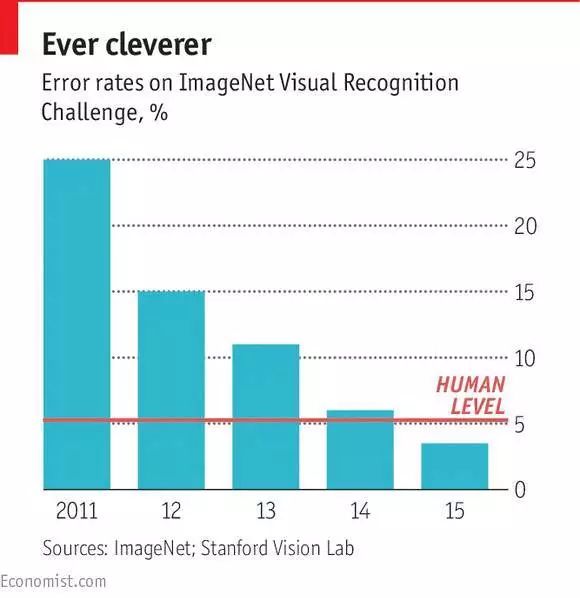

Since its birth, artificial intelligence (AI) has repeatedly been overhyped and has then disappointed. How did it suddenly become today's hottest technology field? The term first appeared in a 1956 research proposal, which stated: "As long as a group of scientists are carefully selected and allowed to work together for a summer, significant progress can be made in enabling machines to solve problems that only humans can solve." That view proved overly optimistic, to say the least. Despite some progress, AI became synonymous with overpromising in people's minds, so much so that researchers largely avoided the term and preferred "expert system" or "neural network" instead. The rehabilitation of "AI" and the current boom can be traced back to the 2012 ImageNet Challenge.

ImageNet is an online database containing millions of images, all labeled by hand. The annual ImageNet Challenge encourages researchers in the field to compete and to measure their progress in automatic image recognition and labeling by computer. Each system is first trained on a set of correctly labeled images and is then challenged to label test images it has never seen before.

At the follow-up workshop, the winners share and discuss their techniques. In 2010, the winning system labeled images with 72% accuracy (humans average 95%). In 2012, a team led by Geoff Hinton, a professor at the University of Toronto, raised accuracy sharply to 85% with a new technique called deep learning. In the 2015 ImageNet Challenge, the technique pushed accuracy further to 96%, surpassing humans for the first time.

So it all comes down to one concept: "deep learning." Although deep learning had already started to heat up before 2016, the real explosion came with the five-game human-versus-machine Go match that Google held in Seoul, South Korea, in 2016 between the AI program AlphaGo and the 9-dan professional Lee Sedol. The top human player lost to the machine. People had long regarded Go as the last bastion of human dignity among board games, yet artificial intelligence took this last stronghold of human intellect with apparent ease. The result shocked everyone.

Since then, academia and industry worldwide have been shaken, and the giants have stepped up their AI deployment: Google hired Geoffrey Hinton, a founder of neural network algorithms and the father of deep learning; Facebook hired Yann LeCun, who had worked under Hinton and is a founder of the convolutional neural network (CNN); and less than a year later, Microsoft won over Yoshua Bengio, the last of the three leading figures in deep learning, who had until then stayed neutral in academia. The Chinese Internet giants, including Baidu, Alibaba, Tencent, JD.com, Didi, and Meituan, are of course also investing in AI, and Baidu has declared itself "All In" on AI.

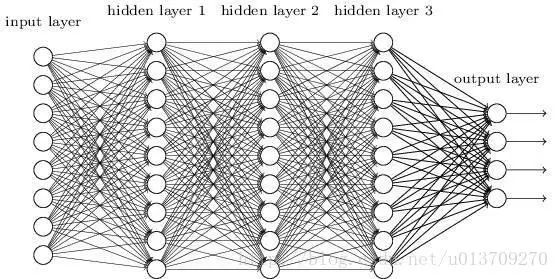

Deep Neural Network (DNN)

3. Machine learning is the road you must take

To get started with AI, you have to learn machine learning. Machine learning can be called the cornerstone and essence of artificial intelligence: only by learning the principles and ideas behind machine learning algorithms can you truly get started with artificial intelligence. But how should a non-specialist begin? The question is hard to answer, because everyone has different goals and a different technical and mathematical background, so the answer varies from person to person. Still, the knowledge needed to learn machine learning algorithms can be listed in general terms.

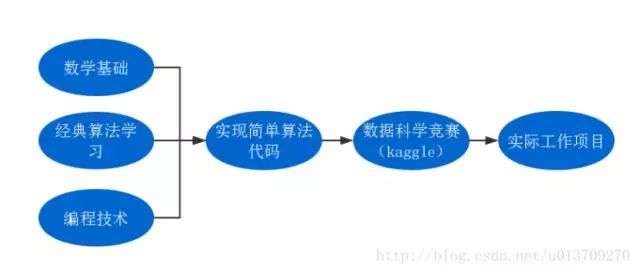

The basics of machine learning

Machine Learning Process

In the figure above, the left side is split into three parallel parts, "mathematical foundations", "classical algorithm learning", and "programming technology", because machine learning is a field that tightly combines mathematics, algorithm theory, and engineering practice. It requires a solid theoretical foundation to guide data analysis and model tuning, and strong engineering skills to train and deploy models and services efficiently.

People working on machine learning in the Internet industry mostly come from one of two backgrounds. The majority are programmers, who tend to have more engineering experience; the rest come from mathematics and statistics, and tend to have a more solid theoretical foundation. The two groups therefore need to strengthen different parts of the figure above.

Mathematics

Countless passionate students have vowed to work in machine learning, only to lose heart the moment they saw the formulas. Indeed, the main reason machine learning seems so lofty and out of reach is the mathematics.

Every algorithm has to fit the training set as well as possible while still generalizing to unseen data, which takes continual analysis of results and data and tuning of parameters. That in turn requires some understanding of the data distribution and of the mathematics underlying the model. Fortunately, if you only want to apply machine learning sensibly rather than do advanced research in the area, the mathematics you need is within reach of anyone with an undergraduate science or engineering background.

The mathematics needed for the basic machine learning algorithms is concentrated in calculus, linear algebra, and probability and statistics. Below we first go through the key knowledge points in each area. The later part of the article introduces some materials that help to learn and consolidate this knowledge.

Calculus

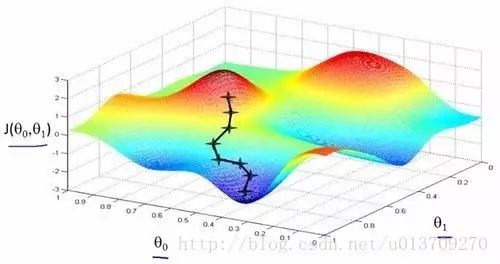

Differential calculus, together with its geometric and physical meaning, lies at the core of how most machine learning algorithms are solved. Gradient descent and Newton's method are typical examples. With a good grasp of the geometry, you can see that gradient descent approximates the function locally with a plane, while Newton's method approximates it locally with a curved surface, and you can better understand how to use such methods.
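To make the contrast concrete, here is a minimal Python sketch of both methods in one dimension. The objective `f(x) = exp(x) - 2x`, the learning rate, and the step counts are assumptions chosen purely for illustration; they do not come from the article. Gradient descent uses only the slope (first derivative), whereas Newton's method also uses the curvature (second derivative).

```python
import math

# Hypothetical convex objective for illustration: f(x) = exp(x) - 2x,
# whose minimum is at x = ln(2).
def grad(x):
    return math.exp(x) - 2.0   # first derivative: local slope

def hess(x):
    return math.exp(x)         # second derivative: local curvature

def gradient_descent(x0, lr=0.1, steps=200):
    """Linear (tangent) approximation: take a small step against the slope."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x

def newton(x0, steps=8):
    """Quadratic approximation: jump to the minimum of the local parabola."""
    x = x0
    for _ in range(steps):
        x -= grad(x) / hess(x)
    return x

if __name__ == "__main__":
    print("gradient descent:", gradient_descent(0.0))
    print("Newton's method: ", newton(0.0))
    print("exact minimizer: ", math.log(2.0))
```

In this toy setting Newton's method needs far fewer iterations, but each iteration requires the second derivative, which becomes a full Hessian matrix in higher dimensions and can be expensive to compute.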

Convex optimization and constrained optimization appear throughout these algorithms. Studying them systematically will take your understanding of the algorithms to a new level.

Gradient Descent Method Schematic

Linear algebra

Most machine learning algorithms depend on efficient computation to be usable in practice. In that setting the nested for loops programmers habitually write are usually not feasible, and most of the loops can be converted into multiplications between matrices, which is where linear algebra comes in. Inner products of vectors are everywhere, and matrix multiplication and decomposition show up again and again in principal component analysis (PCA) and singular value decomposition (SVD).
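As a small illustration of replacing loops with matrix operations, the sketch below computes the same linear predictions twice: once with nested for loops and once as a single matrix-vector product. It assumes NumPy is installed; the function names, data, and weights are made up for the example.

```python
import numpy as np

def predict_loops(X, w):
    """Nested for loops: one inner product per sample, computed by hand."""
    out = []
    for i in range(len(X)):
        s = 0.0
        for j in range(len(w)):
            s += X[i][j] * w[j]
        out.append(s)
    return out

def predict_matrix(X, w):
    """The same computation expressed as one matrix-vector product."""
    return np.asarray(X) @ np.asarray(w)

# Hypothetical toy data: 3 samples with 2 features each.
X = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
w = [0.5, -1.0]

if __name__ == "__main__":
    print(predict_loops(X, w))
    print(predict_matrix(X, w))
```

Both versions return the same numbers. On large inputs the matrix form is typically much faster, because the work is handed to optimized numerical routines instead of the Python interpreter.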

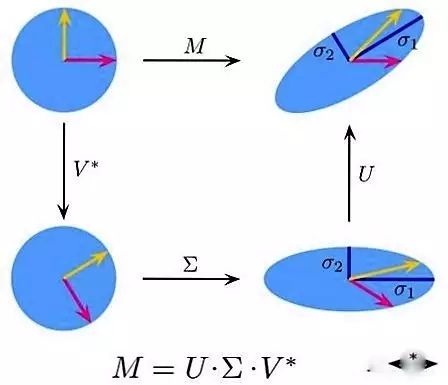

Singular value decomposition process diagram

Many applications in machine learning are closely tied to singular value decomposition, such as PCA, which is often used for dimensionality reduction of features, data compression algorithms (image compression being the typical example), and latent semantic indexing (LSI) for semantic search in search engines.
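The compression idea can be sketched with NumPy's `np.linalg.svd`: keep only the largest `k` singular values and rebuild an approximation of the matrix from them. The small matrix `A` below is a made-up stand-in for an image, and the helper names are hypothetical.

```python
import numpy as np

def low_rank_approx(A, k):
    """Rebuild A from its k largest singular values and vectors."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Scale the first k columns of U by the singular values, then project back.
    return (U[:, :k] * s[:k]) @ Vt[:k, :]

def approx_error(A, k):
    """Frobenius norm of the difference between A and its rank-k approximation."""
    return np.linalg.norm(A - low_rank_approx(A, k))

# Hypothetical 4x3 "image": a rank-1 pattern with one perturbed pixel.
A = np.outer([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0])
A[0, 0] += 0.5

if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, approx_error(A, k))
```

Storing a rank-`k` approximation of an `m x n` matrix takes about `k * (m + n + 1)` numbers instead of `m * n`, which is where the compression comes from when `k` is small.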

Probability and statistics

Broadly speaking, much of what machine learning does closely resembles statistical data analysis and the discovery of hidden patterns. A large part of traditional machine learning is even called statistical learning theory, which shows how important statistics is to the field.

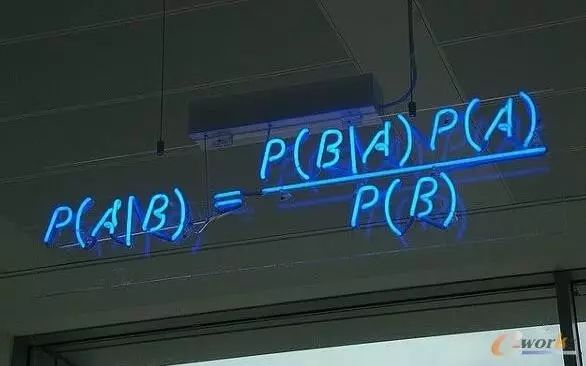

Maximum likelihood estimation and Bayesian models are the theoretical foundations. Naive Bayes, N-gram models, hidden Markov models (HMMs), and latent-variable mixture models are their more advanced forms. Common distributions such as the Gaussian are the basis of Gaussian mixture models (GMMs).

The basic principle of Naive Bayes algorithm
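As a rough illustration of the Naive Bayes principle, the sketch below estimates class priors and per-word likelihoods by counting, with add-one (Laplace) smoothing, and then picks the class with the highest log posterior. The tiny "spam"/"ham" dataset and the function names are invented for the example; this is a teaching sketch, not a production classifier.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(samples, labels):
    """Count how often each class and each (class, word) pair occurs."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for words, label in zip(samples, labels):
        word_counts[label].update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab

def predict(model, words):
    """Return the class maximizing log P(class) + sum of log P(word | class)."""
    class_counts, word_counts, vocab = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)                      # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for w in words:
            # Add-one smoothing keeps unseen words from zeroing the product.
            score += math.log((word_counts[label][w] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy training data.
samples = [["win", "money", "now"], ["free", "money"],
           ["meeting", "at", "noon"], ["lunch", "at", "noon"]]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(samples, labels)

if __name__ == "__main__":
    print(predict(model, ["free", "money", "now"]))
    print(predict(model, ["lunch", "at", "noon"]))
```

The "naive" part is the assumption that words are independent given the class, which is what allows the likelihood to be written as a simple product (a sum in log space).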

Classical algorithm learning

There are many classical algorithms in machine learning: Perceptron, KNN, Naive Bayes, K-Means, SVM, AdaBoost, EM, Decision Tree, Random Forest, GBDT, HMM...

With so many algorithms, how should a beginner approach them? My answer: sort them into categories. Machine learning algorithms are generally divided into three categories: supervised learning, unsupervised learning, and reinforcement learning.
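The difference between the first two categories can be shown with two algorithms from the list above, in a deliberately simplified one-dimensional form. KNN is supervised: it needs labels for the training points. K-Means is unsupervised: it groups the same points without any labels. The data, labels, and starting centers are assumptions made up for this sketch, and reinforcement learning is left out because it needs an environment to interact with.

```python
def knn_predict(train_x, train_y, x, k=3):
    """Supervised: majority vote among the labels of the k nearest points."""
    nearest = sorted(zip(train_x, train_y), key=lambda p: abs(p[0] - x))[:k]
    votes = [label for _, label in nearest]
    return max(set(votes), key=votes.count)

def kmeans_1d(xs, centers, steps=10):
    """Unsupervised: alternate between assigning points and moving centers."""
    centers = list(centers)
    for _ in range(steps):
        clusters = [[] for _ in centers]
        for x in xs:
            idx = min(range(len(centers)), key=lambda i: abs(x - centers[i]))
            clusters[idx].append(x)
        # An empty cluster keeps its previous center.
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers

# Hypothetical toy data: two obvious groups on a line.
xs = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
ys = ["small", "small", "small", "large", "large", "large"]

if __name__ == "__main__":
    print(knn_predict(xs, ys, 1.1))      # uses the labels ys
    print(kmeans_1d(xs, [0.0, 6.0]))     # never sees ys
```

Note that `kmeans_1d` recovers the two groups from the positions alone, while `knn_predict` can only answer because someone labeled the training points beforehand.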

Programming technology

When it comes to programming, the choices are simply the programming language and the development environment. My personal suggestion is Python + PyCharm. The reason is simple: Python is easy to learn and does not require us to spend much time on the language itself (PS: the focus of studying machine learning should be learning and mastering the theory of the algorithms). PyCharm, the Python integrated development environment developed by JetBrains, is also very easy to use.

Python and PyCharm

4. Are you really ready?

The AI sector is certainly hot right now; SenseTime, for example, raised US$450 million in its Series B round. But is this revolution really an opportunity that suits you? Experience shows that not everyone is suited to switching to AI.

You can check yourself against the summary below:

If you are weak at math but very good at programming, and once had the ambition to change the world with code: I think you can make the switch, but you should take the applied AI route.

If you are good at math but weak at programming: congratulations, you have a natural advantage for switching to AI. I think you can make the switch, but you will have to work hard to raise your programming level.

If you majored in mathematics, have won the Fields Medal, and have contributed 10,000 lines of core code to a top-level Apache project: congratulations, you are exactly what the AI field needs. You are the next Hinton or Andrew Ng...

AI is popular. Are you ready?
