Many problems with traditional analog systems are driving the development of digital systems.

In an IP video surveillance (VSIP) system, the hardware that handles network traffic is part of the camera. This is because the camera digitizes the video signal and, to overcome the bandwidth limitations of the network, compresses it before transmitting it to the video server. A heterogeneous processor architecture, such as a DSP/GPP, is desirable for maximum system performance: interrupt-intensive tasks such as video capture, storage, and video streaming can be assigned to the GPP, while the MIPS-intensive video compression is done on the DSP. After the data reaches the video server, the server stores the compressed video stream on a hard disk as a file, which avoids the quality degradation of traditional analog storage devices.
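A rough estimate of the raw bitrate shows why the signal has to be compressed before it leaves the camera. The sketch below assumes an NTSC frame of 720 × 480 pixels, 4:2:0 chroma subsampling at 8 bits per sample (12 bits per pixel on average), 30 f/s, and a 100 Mbit/s Fast Ethernet link; these parameters are illustrative assumptions, not figures from the article.

```python
def raw_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Uncompressed video bitrate in bits per second."""
    return width * height * bits_per_pixel * fps


# Assumed source: 720x480 NTSC frame, YUV 4:2:0 at 8 bits/sample
# (8 bits luma + 4 bits chroma per pixel on average), 30 frames/s.
RAW_BPS = raw_bitrate_bps(720, 480, 12, 30)

# Assumed network link: 100 Mbit/s Fast Ethernet, ignoring protocol overhead.
LINK_BPS = 100_000_000

if __name__ == "__main__":
    print(f"raw stream: {RAW_BPS / 1e6:.1f} Mbit/s")
    print(f"fits on the link uncompressed: {RAW_BPS <= LINK_BPS}")
```

Under these assumptions a single uncompressed stream (about 124.4 Mbit/s) already exceeds the whole link before any protocol overhead, let alone several cameras sharing it.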

Various standards have been developed for digital video compression. They fall into two categories:

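• Still image compression: each video frame is encoded individually as a still image. The best-known standard is JPEG; Motion JPEG (MJPEG) encodes every frame with the JPEG algorithm.
• Motion estimation (ME)-based compression: a sequence of frames is encoded together, exploiting the similarity between neighboring frames. H.264, described below, is an example.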
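Because MJPEG encodes every frame as a self-contained JPEG image, a receiver can recover individual frames from a concatenated MJPEG byte stream by scanning for the JPEG start-of-image (0xFFD8) and end-of-image (0xFFD9) markers. This is a minimal sketch, assuming frames are simply concatenated and carry no embedded thumbnails (an EXIF thumbnail has its own SOI/EOI pair); real transports such as HTTP multipart streams add framing headers that a robust receiver would use instead.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_mjpeg(stream: bytes) -> list[bytes]:
    """Split a concatenated MJPEG byte stream into individual JPEG frames.

    Entropy-coded JPEG data uses byte stuffing (0xFF is sent as 0xFF 0x00),
    so EOI cannot appear there by accident. Header segments are not parsed,
    which is one reason this is only a sketch.
    """
    frames = []
    pos = 0
    while True:
        start = stream.find(SOI, pos)
        if start < 0:
            break
        end = stream.find(EOI, start + 2)
        if end < 0:
            break  # incomplete trailing frame: wait for more data
        frames.append(stream[start:end + 2])
        pos = end + 2
    return frames
```

Each returned frame can be handed to any JPEG decoder on its own, which is exactly the property that lets MJPEG frames be displayed or dropped independently.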

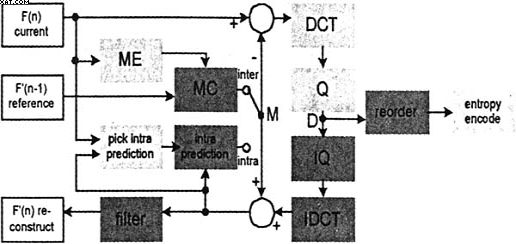

Figure 1 shows a block diagram of an H.264 encoder. Like other ME-based video coding standards, H.264 processes each frame one macroblock (MB, 16 × 16 pixels) at a time. The encoder has a forward path and a reconstruction path. The forward path encodes the frame into bits; the reconstruction path produces a reference frame from the encoded bits.
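The macroblock-by-macroblock processing order can be sketched as a raster scan over 16 × 16 tiles of the luma plane. This sketch assumes the frame dimensions are multiples of 16 (true for 720 × 480, which gives 45 × 30 = 1350 macroblocks) and represents a frame as a plain list of rows; it only enumerates the blocks and performs no encoding.

```python
from typing import Iterator

MB_SIZE = 16  # H.264 macroblock size in luma samples


def macroblocks(frame: list[list[int]]) -> Iterator[tuple[int, int, list[list[int]]]]:
    """Yield (mb_x, mb_y, block) for each 16x16 macroblock in raster order.

    `frame` is a list of rows of luma samples; width and height are
    assumed to be multiples of MB_SIZE.
    """
    height = len(frame)
    width = len(frame[0])
    for mb_y in range(height // MB_SIZE):
        for mb_x in range(width // MB_SIZE):
            rows = frame[mb_y * MB_SIZE:(mb_y + 1) * MB_SIZE]
            block = [row[mb_x * MB_SIZE:(mb_x + 1) * MB_SIZE] for row in rows]
            yield mb_x, mb_y, block


if __name__ == "__main__":
    frame = [[0] * 720 for _ in range(480)]
    print(sum(1 for _ in macroblocks(frame)))  # 1350 macroblocks for 720x480
```

In an encoder, each yielded block would go through the forward path (prediction, DCT, Q) and the reconstruction path before the next one is processed.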

In the forward path (DCT to Q), each MB can be encoded in intra mode or inter mode. In inter mode, the reference MB is found by the ME module in a previously encoded frame. In intra mode, the reference MB is formed from samples of the current frame.
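The choice between inter and intra mode is typically made by comparing prediction costs. The sketch below uses full-search block matching with the sum of absolute differences (SAD) for the inter candidate and, as a deliberate simplification of H.264's several intra predictors, a single DC prediction from the samples above and to the left of the block. The search range, the use of original (rather than reconstructed) neighbors, and the plain SAD cost are assumptions for illustration; a real encoder uses reconstructed samples, multiple intra modes, and rate-distortion costs.

```python
def sad(a, b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def block_at(frame, x, y, n):
    return [row[x:x + n] for row in frame[y:y + n]]


def motion_search(cur, ref, x, y, n=16, search=4):
    """Full search within +/-search pixels. Returns (dx, dy, cost), where
    (x + dx, y + dy) is the best-matching block position in `ref`."""
    target = block_at(cur, x, y, n)
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = x + dx, y + dy
            if rx < 0 or ry < 0 or rx + n > w or ry + n > h:
                continue  # candidate block falls outside the reference frame
            cost = sad(target, block_at(ref, rx, ry, n))
            if best is None or cost < best[2]:
                best = (dx, dy, cost)
    return best


def intra_dc_cost(cur, x, y, n=16):
    """SAD against a flat DC prediction built from the row above and the
    column to the left (mid-gray 128 if the block has no neighbors)."""
    neighbors = []
    if y > 0:
        neighbors += cur[y - 1][x:x + n]
    if x > 0:
        neighbors += [cur[y + i][x - 1] for i in range(n)]
    dc = round(sum(neighbors) / len(neighbors)) if neighbors else 128
    return sum(abs(v - dc) for row in block_at(cur, x, y, n) for v in row)


def choose_mode(cur, ref, x, y, n=16, search=4):
    dx, dy, inter_cost = motion_search(cur, ref, x, y, n, search)
    intra_cost = intra_dc_cost(cur, x, y, n)
    if inter_cost <= intra_cost:
        return ("inter", (dx, dy), inter_cost)
    return ("intra", None, intra_cost)
```

A textured block that has simply moved between frames is cheapest to code as inter with a motion vector, while a flat region that matches its surroundings but not the previous frame is cheaper as intra.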

The purpose of the reconstruction path (IQ to IDCT) is to ensure that the encoder and decoder use the same reference frame to produce the image. Otherwise, errors between the encoder and the decoder would accumulate.
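Why the encoder must predict from its own reconstruction can be shown with a one-dimensional toy predictive coder (DPCM) standing in for the full H.264 loop. Each sample is predicted from the previous one and only the quantized residual is sent. If the encoder predicts from the original previous sample (open loop), the decoder, which only has reconstructed samples, drifts further away over time; if the encoder predicts from its reconstruction (closed loop, the role the IQ/IDCT path plays), the decoder error stays within about half a quantization step. The quantizer step and the test signal are arbitrary assumptions.

```python
def quantize(residual: float, step: float) -> int:
    """Uniform quantizer: index of the nearest multiple of `step`."""
    return round(residual / step)


def encode(samples: list[float], step: float, closed_loop: bool) -> list[int]:
    """Toy DPCM encoder: predict each sample from the previous one."""
    indices = []
    prediction = 0.0
    for s in samples:
        q = quantize(s - prediction, step)
        indices.append(q)
        if closed_loop:
            # Rebuild exactly what the decoder will see (IQ/IDCT analogue).
            prediction = prediction + q * step
        else:
            # Open loop: predict from the original, which the decoder never has.
            prediction = s
    return indices


def decode(indices: list[int], step: float) -> list[float]:
    out = []
    prediction = 0.0
    for q in indices:
        prediction = prediction + q * step
        out.append(prediction)
    return out


def max_error(samples: list[float], step: float, closed_loop: bool) -> float:
    rec = decode(encode(samples, step, closed_loop), step)
    return max(abs(a - b) for a, b in zip(samples, rec))


if __name__ == "__main__":
    ramp = [0.3 * i for i in range(100)]  # slowly rising test signal
    print("closed loop:", max_error(ramp, 1.0, closed_loop=True))   # about step/2 at most
    print("open loop:  ", max_error(ramp, 1.0, closed_loop=False))  # drifts with time
```

On the slow ramp, every open-loop residual is smaller than half a step, so every index is 0 and the decoder output never moves; the closed-loop encoder sees its own accumulated error in the next residual and corrects it.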

Because consecutive video frames usually share a great deal of redundant information, the ME-based method can achieve a higher compression ratio. For example, at NTSC standard resolution (30 f/s), an H.264 encoder can encode video at 2 Mbps with average image quality, a compression ratio of about 60:1. For similar quality, MJPEG achieves a compression ratio of only about 10:1 to 15:1.
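These figures can be cross-checked with a little arithmetic. Assuming the uncompressed NTSC source is 720 × 480 pixels, 4:2:0 at 8 bits per sample (12 bits per pixel) and 30 f/s, its raw rate is about 124.4 Mbit/s, so 2 Mbit/s corresponds to roughly 62:1, in line with the quoted 60:1. The article does not say which raw format its ratios refer to, so the constant below is an assumption.

```python
RAW_BPS = 720 * 480 * 12 * 30  # assumed raw NTSC 4:2:0 stream, bits/s


def ratio_for_bitrate(bitrate_bps: float) -> float:
    """Compression ratio needed to reach a given bitrate."""
    return RAW_BPS / bitrate_bps


def bitrate_for_ratio(ratio: float) -> float:
    """Bitrate (bits/s) produced by a given compression ratio."""
    return RAW_BPS / ratio


if __name__ == "__main__":
    print(f"H.264 at 2 Mbit/s: {ratio_for_bitrate(2e6):.0f}:1")
    for r in (10, 15):
        print(f"MJPEG at {r}:1: {bitrate_for_ratio(r) / 1e6:.1f} Mbit/s")
```

Under the same assumptions, MJPEG at 10:1 to 15:1 needs roughly 8.3 to 12.4 Mbit/s per camera, four to six times the H.264 figure.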

MJPEG has several advantages over the ME-based approach. The main one is that JPEG requires relatively little computation and power. In addition, most PCs have software that can decode and display JPEG images. MJPEG is also more effective when a particular event (such as someone entering) is captured in a single image or a small number of images. When network bandwidth cannot be guaranteed, MJPEG is preferred: with the ME-based approach, the delay or loss of one frame delays or loses the rest of the GOP (all of it, if the lost frame is the reference I-frame), because subsequent frames cannot be decoded until the reference frame they depend on is available.
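The difference in loss behavior can be made concrete by listing which frames remain decodable after a loss. This sketch assumes a simple GOP of one I-frame followed by P-frames, each P-frame referencing the frame before it, with no B-frames and no error concealment; the GOP length of 15 is an arbitrary example.

```python
def decodable_ipp(gop_len: int, lost: set[int]) -> list[bool]:
    """I P P ... GOP: frame k needs frame k-1 unless it is the I-frame (k == 0)."""
    ok = []
    for k in range(gop_len):
        if k in lost:
            ok.append(False)
        elif k == 0:
            ok.append(True)
        else:
            ok.append(ok[k - 1])  # a P-frame is only as good as its reference
    return ok


def decodable_mjpeg(n_frames: int, lost: set[int]) -> list[bool]:
    """MJPEG: every frame is an independent JPEG image."""
    return [k not in lost for k in range(n_frames)]


if __name__ == "__main__":
    lost = {3}
    print(sum(decodable_ipp(15, lost)))    # 3: only frames 0-2 survive
    print(sum(decodable_mjpeg(15, lost)))  # 14: only frame 3 is missing
```

Losing frame 0 in the IPP case makes the whole GOP undecodable, whereas MJPEG always loses exactly the frames that were dropped.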

In a typical digital surveillance system, video is captured from a sensor, compressed, and then streamed to a video server. Interrupting the video encoder task running on the DSP is undesirable, because each context switch involves saving and restoring a large number of registers and disturbs the cache. A heterogeneous architecture is therefore ideal, since video capture and streaming tasks can be offloaded from the DSP. An example of a DSP/GPP processor used in video surveillance applications is described below.

When implementing digital video compression on a DSP/GPP SoC-based system, developers should first partition the functional modules appropriately between the cores to achieve good system performance.

The EMAC driver, the TCP/IP network stack and HTTP server (which together stream the compressed video to the outside), and the ATA driver should all run on the ARM, which offloads the DSP. Compression should be implemented on the DSP core, because its VLIW architecture is particularly well suited to computationally intensive tasks of this kind.
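The partitioning rule can be written down as a small table. This is hypothetical bookkeeping, not the API of any real SoC SDK: each module named in the text (plus video capture, which the article also assigns to the GPP) is tagged as interrupt/I-O-driven or compute-bound, and the rule sends the former to the ARM and the latter to the DSP.

```python
from enum import Enum


class Core(Enum):
    ARM = "ARM (GPP)"
    DSP = "DSP"


# Hypothetical classification of the modules named in the text.
# "io" = interrupt-driven / control-heavy, "compute" = MIPS-intensive.
MODULES = {
    "EMAC driver": "io",
    "TCP/IP stack": "io",
    "HTTP server": "io",
    "ATA driver": "io",
    "video capture": "io",
    "video compression": "compute",
}


def assign(workload: str) -> Core:
    """Compute-bound work goes to the VLIW DSP; everything else to the ARM."""
    return Core.DSP if workload == "compute" else Core.ARM


def partition(modules: dict[str, str]) -> dict[Core, list[str]]:
    plan = {core: [] for core in Core}
    for name, workload in modules.items():
        plan[assign(workload)].append(name)
    return plan


if __name__ == "__main__":
    for core, names in partition(MODULES).items():
        print(f"{core.value}: {', '.join(names)}")
```

Keeping the DSP's list down to the encoder is the point of the design: the encoder then runs without being preempted by network or disk interrupts.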

Once a video frame is captured from the camera through the processor's video input port, the raw video is compressed by the video encoder task, and the compressed video is then stored on the on-board hard disk.
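The capture, encode, and store sequence forms a pipeline of stages connected by bounded queues, so that a momentarily slow disk write does not stall capture. The sketch below models it with Python threads and `queue.Queue`; `capture_frame`, `encode_frame`, and the in-memory `storage` list are hypothetical stand-ins for the video input driver, the DSP encoder task, and the hard-disk writer. On the real SoC the stages would run on different cores rather than as threads in one process.

```python
import queue
import threading

STOP = object()  # sentinel telling the next stage to shut down


def capture_frame(i: int) -> bytes:
    return f"raw-{i}".encode()  # hypothetical stand-in for the video input port


def encode_frame(raw: bytes) -> bytes:
    return b"enc:" + raw  # hypothetical stand-in for the DSP encoder task


def capture_stage(n_frames: int, out_q: queue.Queue) -> None:
    for i in range(n_frames):
        out_q.put(capture_frame(i))  # blocks if the encoder falls behind
    out_q.put(STOP)


def encode_stage(in_q: queue.Queue, out_q: queue.Queue) -> None:
    while (raw := in_q.get()) is not STOP:
        out_q.put(encode_frame(raw))
    out_q.put(STOP)


def store_stage(in_q: queue.Queue, storage: list) -> None:
    while (packet := in_q.get()) is not STOP:
        storage.append(packet)  # stand-in for writing to the on-board disk


def run_pipeline(n_frames: int, depth: int = 4) -> list:
    raw_q: queue.Queue = queue.Queue(maxsize=depth)
    enc_q: queue.Queue = queue.Queue(maxsize=depth)
    storage: list = []
    threads = [
        threading.Thread(target=capture_stage, args=(n_frames, raw_q)),
        threading.Thread(target=encode_stage, args=(raw_q, enc_q)),
        threading.Thread(target=store_stage, args=(enc_q, storage)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return storage
```

Because each stage is a single consumer reading a FIFO queue, frames reach storage in capture order.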

In a field deployment of such a system, a PC can monitor a live video scene by retrieving the data stream from the video server, then decoding and displaying it on the monitor. Individual encoded JPEG image files can likewise be retrieved from the board over the Internet. Multiple data streams can be monitored on a single PC, and a data stream can be monitored simultaneously from multiple points in the network. Unlike in traditional analog systems, the VSIP central office connects to the video servers over a TCP/IP network and can be physically located anywhere on the network. The single point of failure therefore becomes the digital camera rather than the central office. The quality of the JPEG images can also be configured dynamically.
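Retrieving single JPEG images from the board, with the quality chosen per request, can be sketched with Python's standard `http.server`. The path `/snapshot.jpg`, the `quality` query parameter, and the `encode_snapshot` function are hypothetical; a real video server would return the latest frame from the DSP encoder, which this sketch fakes with a few bytes framed by JPEG SOI/EOI markers.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlparse

DEFAULT_QUALITY = 75


def encode_snapshot(quality: int) -> bytes:
    # Hypothetical placeholder for "latest frame encoded at this JPEG quality".
    return b"\xff\xd8" + f"q={quality}".encode() + b"\xff\xd9"


class SnapshotHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        url = urlparse(self.path)
        if url.path != "/snapshot.jpg":
            self.send_error(404)
            return
        try:
            quality = int(parse_qs(url.query).get("quality", [DEFAULT_QUALITY])[0])
        except ValueError:
            self.send_error(400, "quality must be an integer")
            return
        quality = max(1, min(100, quality))  # clamp to the usual 1-100 range
        body = encode_snapshot(quality)
        self.send_response(200)
        self.send_header("Content-Type", "image/jpeg")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def start_server(port: int = 0) -> ThreadingHTTPServer:
    """Serve snapshots in a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), SnapshotHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_snapshot(port: int, quality: int):
    """Client side: several PCs (or threads) can call this concurrently."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", f"/snapshot.jpg?quality={quality}")
        resp = conn.getresponse()
        return resp.status, resp.getheader("Content-Type"), resp.read()
    finally:
        conn.close()
```

Using a threading server means several viewers can pull snapshots at the same time, each with its own quality setting.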

Figure 1 H.264 encoder block diagram
