Since AlphaGo, the world-renowned Go program, reinforcement learning has made remarkable achievements in games in recent years. Board and card games, shooters, and titles such as Atari, Super Mario, StarCraft, and DOTA have all seen breakthroughs, making this a hot research field.

Cue the sudden wave of nostalgia~

Today I will introduce a toolkit for training and improving reinforcement learning algorithms by simulating the arcade game "Street Fighter III". And not only that: because this Python library runs on the MAME game emulator, it can train your algorithms on the vast majority of arcade games.

I will walk through it step by step, from installation and setup to testing.

Currently the toolkit is supported on Linux as a wrapper around MAME. With it, your algorithm can step through the game while receiving each frame and the values of internal memory addresses to track the game state, and it can send actions to interact with the game.

The first thing you need to prepare is:

Operating System: Linux

Python version: 3.6+

▌Installation

You can install the library with pip by running the following command:

▌Street Fighter 3 example

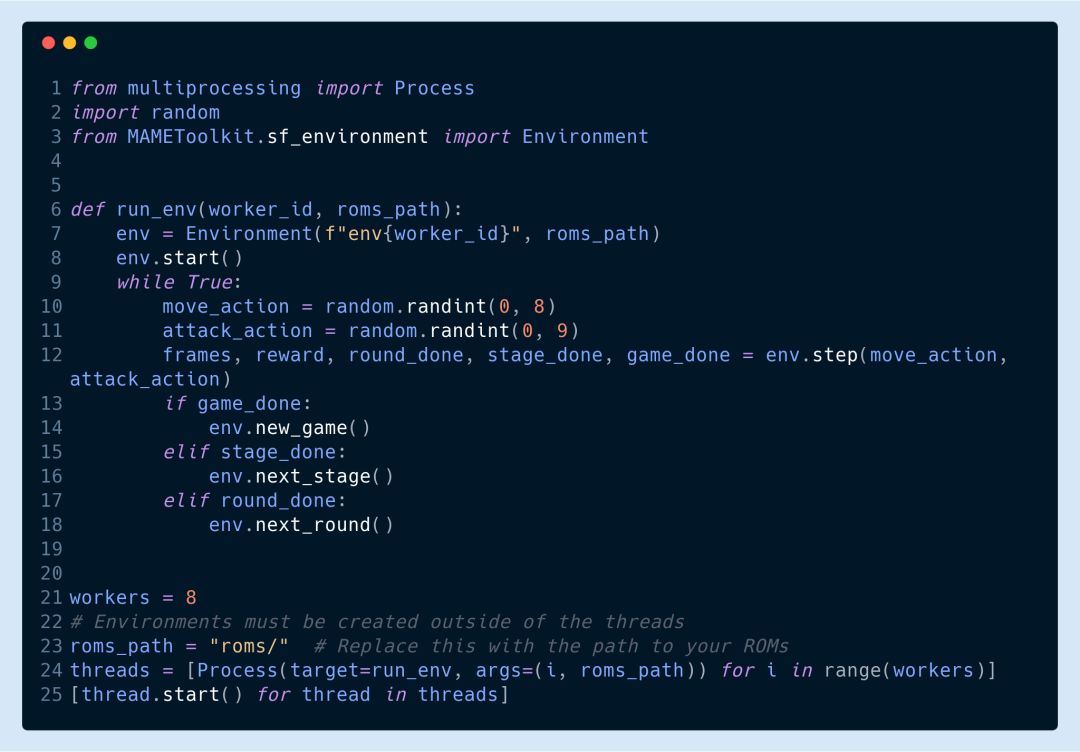

The toolkit has so far been applied to Street Fighter III Third Strike: Fight for the Future, but it can be adapted to any game available on MAME. The following code demonstrates how to write a random agent for Street Fighter.
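As a minimal sketch of what a random agent loop looks like, the example below uses a hypothetical `StubEnvironment` in place of the toolkit's real Street Fighter environment. The class name, its `start` and `step` methods, the action counts, and the reward values are all assumptions made for illustration, not the toolkit's actual API.

```python
import random


class StubEnvironment:
    """Hypothetical stand-in for a fighting-game environment (not the toolkit's API)."""

    N_MOVE_ACTIONS = 9     # assumed: neutral plus 8 joystick directions
    N_ATTACK_ACTIONS = 10  # assumed: no attack plus several button combinations

    def __init__(self, episode_length=50, seed=0):
        self._rng = random.Random(seed)
        self._episode_length = episode_length
        self._t = 0

    def start(self):
        """Begin an episode and return a blank fake frame."""
        self._t = 0
        return [[0] * 4 for _ in range(4)]

    def step(self, move_action, attack_action):
        """Advance one step and return (frame, reward, done) with fake data."""
        self._t += 1
        frame = [[self._rng.randrange(256) for _ in range(4)] for _ in range(4)]
        reward = self._rng.choice([-1, 0, 1])
        done = self._t >= self._episode_length
        return frame, reward, done


def run_random_agent(env, seed=0):
    """Play one episode with uniformly random actions.

    Returns the total reward and the number of steps taken.
    """
    rng = random.Random(seed)
    env.start()
    total_reward, steps, done = 0, 0, False
    while not done:
        move = rng.randrange(env.N_MOVE_ACTIONS)
        attack = rng.randrange(env.N_ATTACK_ACTIONS)
        _frame, reward, done = env.step(move, attack)
        total_reward += reward
        steps += 1
    return total_reward, steps


if __name__ == "__main__":
    total, steps = run_random_agent(StubEnvironment())
    print(f"episode finished after {steps} steps, total reward {total}")
```

A learning agent would replace the two `randrange` calls with a policy that chooses actions from the observed frame.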

Additionally, this toolkit supports hogwild training:
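The idea behind hogwild training is that several workers update one shared set of parameters without locking. The sketch below illustrates only that idea on a toy objective: the worker function, the single shared weight, and the quadratic loss are hypothetical, and in real use each worker would instead gather experience from its own emulator instance.

```python
import threading


def hogwild_worker(shared_w, target, lr, n_steps):
    """Run SGD on (w - target)^2, updating the shared weight without a lock."""
    for _ in range(n_steps):
        w = shared_w[0]              # read the shared parameter (may be stale)
        grad = 2.0 * (w - target)    # gradient of the squared error
        shared_w[0] = w - lr * grad  # lock-free write back


def train_hogwild(n_workers=8, target=3.0, lr=0.1, n_steps=200):
    """Start n_workers threads that all update the same parameter."""
    shared_w = [0.0]  # one shared parameter, visible to every worker
    workers = [
        threading.Thread(target=hogwild_worker,
                         args=(shared_w, target, lr, n_steps))
        for _ in range(n_workers)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return shared_w[0]


if __name__ == "__main__":
    print(f"shared weight after training: {train_hogwild():.4f}")
```

Because no lock is taken, a worker may overwrite a newer value with one computed from a stale read. Hogwild accepts that in exchange for never making workers wait on each other.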

▌Game Environment Settings

Game ID

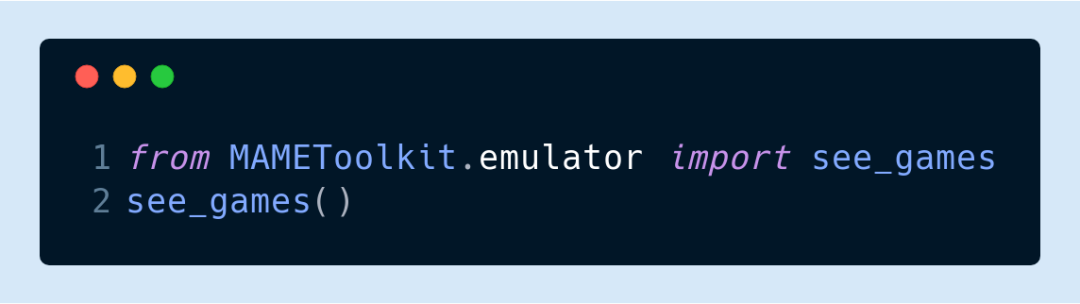

Before creating an emulation environment, you need to load the game's ROM and obtain the game ID that MAME uses. For example, the game ID for this version of Street Fighter is "sfiii3n". You can look up game IDs by running the following command:

This command will open the MAME emulator and you can select the game you want from the list of games. The game's ID is usually in parentheses after the title.

Memory Address

The toolkit actually needs little interaction with the emulator itself: you only have to look up the memory addresses associated with the game's internal state and track that state through the chosen environment. You can use the MAME Cheat Debugger to observe how the values at memory addresses change over time.
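To make the idea of tracking state through memory addresses concrete, here is a small self-contained sketch that decodes named values from a raw memory snapshot. The `TrackedAddress` type, the offsets, the big-endian byte order, and the field names are all hypothetical; real addresses have to be found with the debugger.

```python
import struct
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedAddress:
    """Hypothetical description of one memory location to track."""
    offset: int  # byte offset into the memory snapshot
    fmt: str     # struct code: "b" signed 8-bit, "B" unsigned 8-bit, "H" unsigned 16-bit


def read_state(memory, addresses):
    """Decode every tracked address from a raw snapshot into an integer."""
    return {
        name: struct.unpack_from(">" + addr.fmt, memory, addr.offset)[0]
        for name, addr in addresses.items()
    }


# Made-up layout for illustration only.
ADDRESSES = {
    "health_p1": TrackedAddress(offset=0, fmt="b"),
    "health_p2": TrackedAddress(offset=1, fmt="b"),
    "round_timer": TrackedAddress(offset=2, fmt="B"),
}

snapshot = bytes([120, 0xFF, 99])  # 0xFF read as a signed byte is -1
state = read_state(snapshot, ADDRESSES)
print(state)
```

The signed versus unsigned distinction matters: the same byte `0xFF` decodes to -1 or 255 depending on the format chosen for that address.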

The Debugger can be run with the following command:

For more instructions on using this debugging tool, please refer to this tutorial:

https://

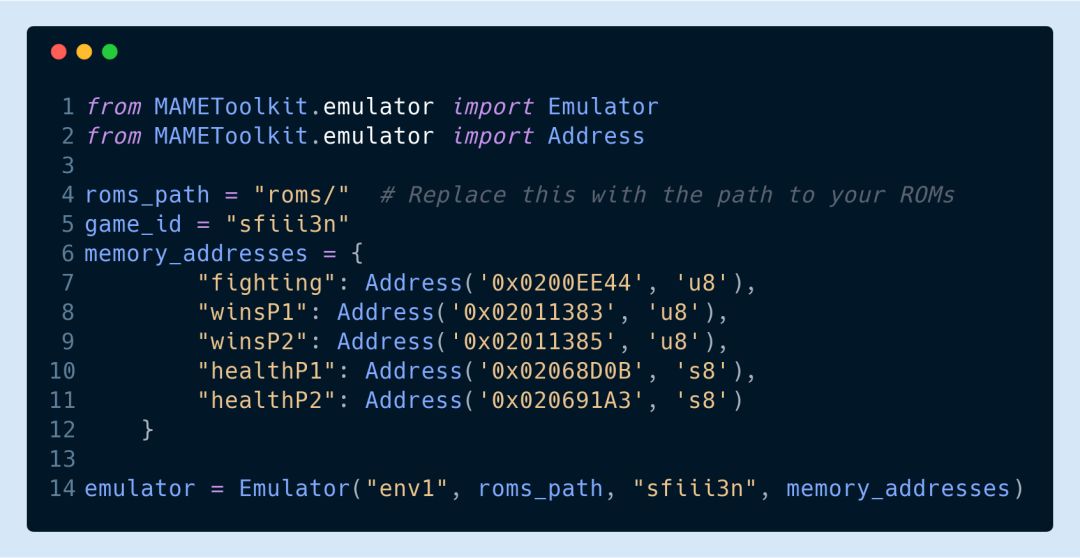

After you have determined the memory address you want to track, you can execute the following command to simulate:

This command starts the emulator and pauses it once the toolkit has linked to the emulator process.

Step-by-Step Simulation

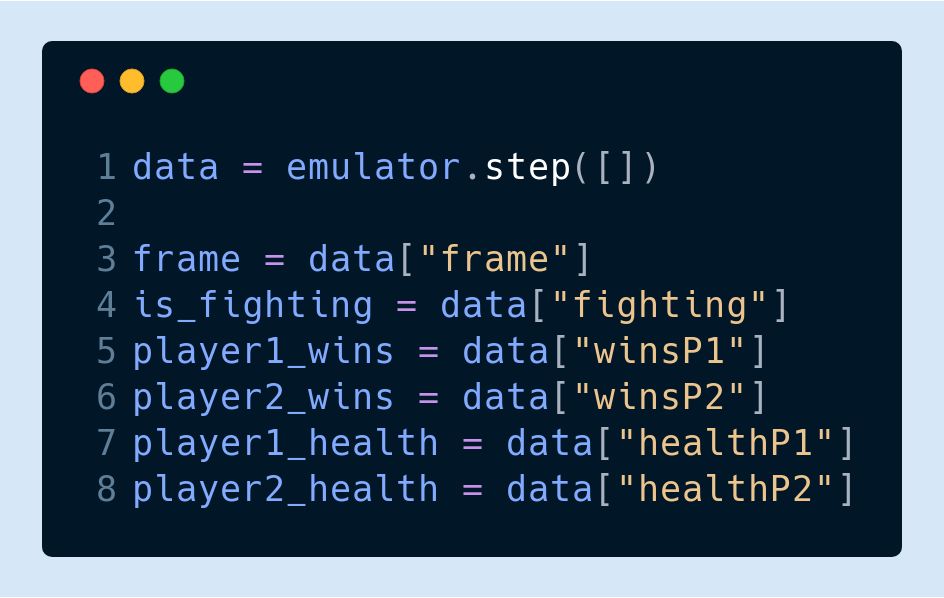

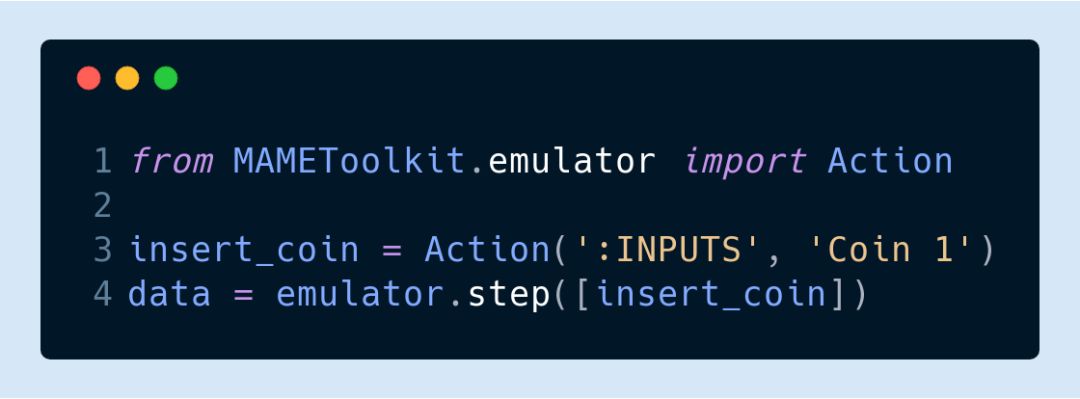

Once the toolkit is linked, you can use the step function to advance the simulation one step at a time:

The step function returns the frame data as a NumPy matrix, along with the integer values of all tracked memory addresses for that time step.

Send Input

If you want to send actions to the emulator, you also need to determine the input ports and fields that the game supports. For example, in Street Fighter, the following code is needed to insert a coin:

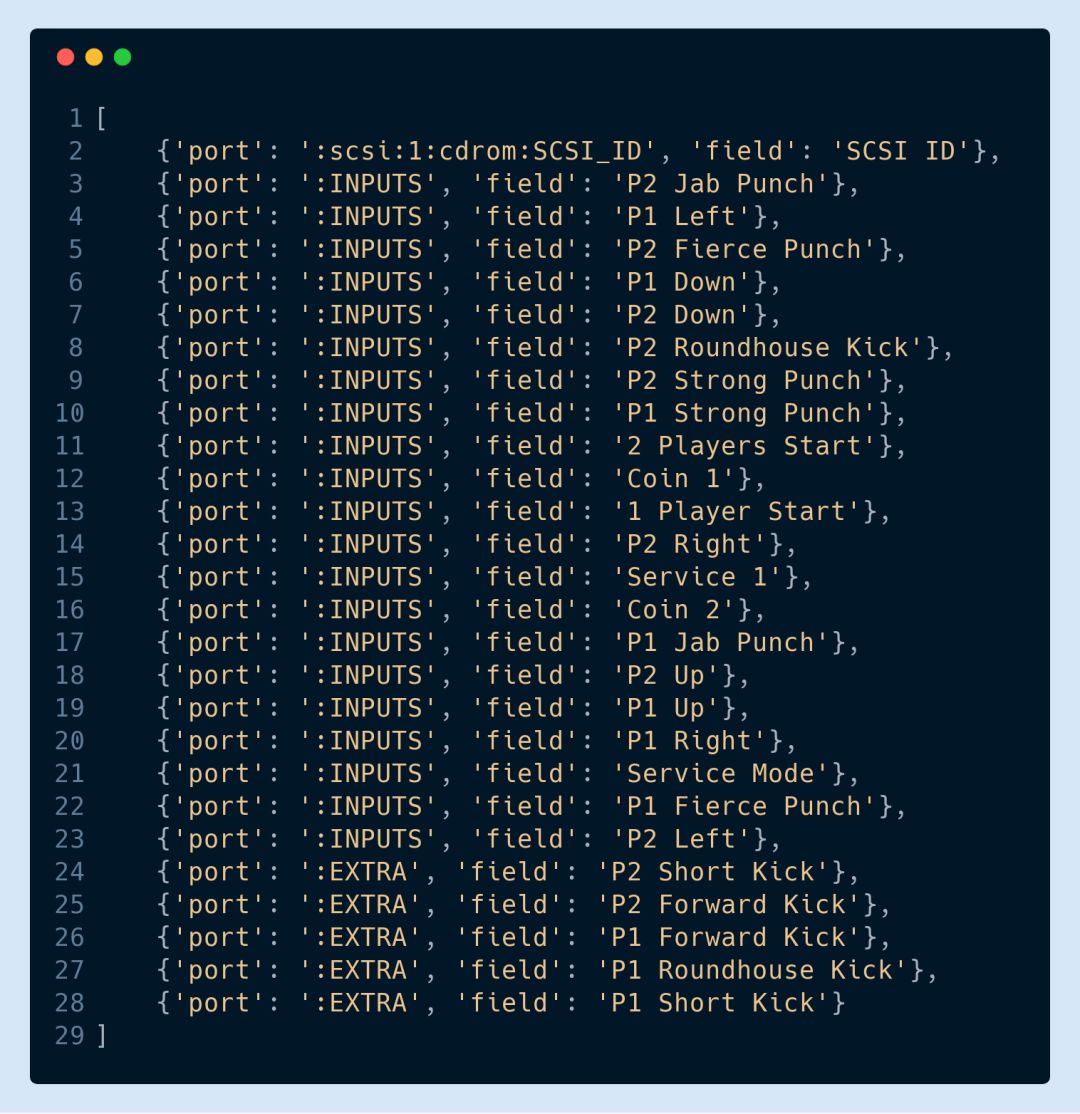

You can use the list actions command to view the supported input ports. The code is as follows:

The following returned list contains all the ports and fields in the Street Fighter game environment that can be used to send actions to the step function:

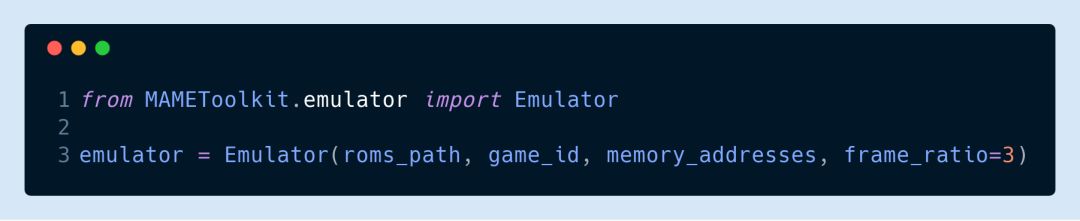

The emulator also has a frame_ratio parameter that can be used to adjust the frame rate your algorithm sees. At default settings, MAME generates 60 frames per second. If that is too many, you can reduce it to 20 frames per second with the following code:
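The arithmetic behind that setting can be sketched as follows, assuming that frame_ratio is the number of emulator frames per frame delivered to the algorithm, so that a ratio of 3 turns 60 frames per second into 20. The helper names, and the choice to deliver the last frame of each group, are hypothetical.

```python
NATIVE_FPS = 60  # MAME's default frame rate, as stated in the text


def agent_fps(frame_ratio, native_fps=NATIVE_FPS):
    """Frames per second seen by the algorithm for a given frame ratio."""
    if frame_ratio < 1:
        raise ValueError("frame_ratio must be at least 1")
    return native_fps / frame_ratio


def frames_seen(total_frames, frame_ratio):
    """Indices of the emulator frames the algorithm would observe,
    assuming it receives the last frame of every group of frame_ratio frames."""
    return list(range(frame_ratio - 1, total_frames, frame_ratio))


print(agent_fps(3))       # 60 / 3
print(frames_seen(9, 3))  # every third frame out of nine
```

A lower frame rate means fewer decisions per second of game time, which shortens episodes in terms of steps and reduces the compute spent per second of gameplay.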

▌Performance benchmarks

The kit was developed and tested on an 8-core AMD FX-8300 3.3GHz CPU with a 3GB GeForce GTX 1060 GPU. With a single random agent, the Street Fighter environment runs at 600%+ of normal game speed. With hogwild training across 8 random agents, the environment runs at 300%+ of normal game speed.

▌Simple ConvNet Agent

To confirm that the toolkit can actually train an algorithm, a simple 5-layer ConvNet was set up with only minimal tuning. In the Street Fighter experiment, the algorithm successfully learned some simple techniques such as combos and blocking. Street Fighter has 10 stages of increasing difficulty, and the player fights a different opponent in each stage. At the beginning, the agent reached only the second stage on average; after 2,200 training episodes, it reached the fifth stage on average. The agent's learning progress was measured by the net damage it dealt and the damage it took in each playthrough.
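One way to compute the damage figures mentioned above from health values read before and after a round is sketched below. The function name and the health numbers are hypothetical, and the source does not say exactly how the original experiment combined these quantities.

```python
def damage_summary(own_before, own_after, opp_before, opp_after):
    """Summarize a round from health values sampled at its start and end.

    Health that rises (for example after a reset) is counted as zero damage.
    """
    dealt = max(opp_before - opp_after, 0)  # health the opponent lost
    taken = max(own_before - own_after, 0)  # health the agent lost
    return {"dealt": dealt, "taken": taken, "net": dealt - taken}


# Hypothetical round: both fighters start at 160 health.
summary = damage_summary(own_before=160, own_after=130,
                         opp_before=160, opp_after=100)
print(summary)
```

A positive net value means the agent dealt more damage than it took, so tracking this number over many episodes gives a simple view of whether the agent is improving.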
