As data generation from cloud-based services and machine-to-machine communication grows exponentially, data centers face challenges.

This growth shows no sign of slowing down: industry experts predict that machine-to-machine traffic inside the data center will exceed all other types of traffic by multiple orders of magnitude. This growth brings three major challenges to the data center.

Three major challenges

Challenge 1: Data speed. Data must be received and processed quickly enough to keep up with high-speed transmission, so that the data center can support near real-time performance.

Challenge 2: Data types. Data arrives in many different formats, ranging from images and video to sensor and log data, and includes both structured and unstructured data.

Challenge 3: Data volume. The data center must handle the total amount of data generated by all users.

For many applications, addressing these challenges requires direct communication between data centers, for example to provide indexing, analysis, data synchronization, and backup and recovery services. Supporting communication between data centers requires very large data pipes, and the network that carries them is often referred to as the Data Center Interconnect (DCI).

DCI plays a pivotal role in scaling data center deployments and in allowing more data centers to roll out services within a given geographic area. Of course, as the number of data centers increases, so does the degree of interconnection between them.

To implement DCI in a data center, you can use either a dedicated interface box or a traditional transport device. A dedicated interface box provides the interface between the network outside the data center (line side) and the data center's internal network (client side).

Data security is critical because the data center stores sensitive information, such as financial records, health records, and other business-critical data. A security breach can cost a data center owner the confidence and trust of its customers and lead to lost revenue. Worst of all, a significant breach can also have legal or regulatory consequences that affect operations.

Information security is therefore a top priority, both within the data center and between data centers. This requires the DCI implementation to support encryption and decryption of data as it moves in and out of the data center.

Current DCI implementations use one of the following technologies:

Bulk Layer 1 security scheme: The entire content of the pipe is encrypted and authenticated with a cipher such as AES-256. This is by far the most cost-effective way to secure large point-to-point data pipes.

MACsec, defined by IEEE 802.1AE: Packets are encrypted and authenticated individually, which is straightforward to implement in hardware. MACsec provides security at Layer 2.
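To make the per-packet nature of MACsec more concrete, the following minimal sketch builds the security tag (SecTAG) that MACsec places in front of the protected payload of each frame. The field layout follows IEEE 802.1AE as commonly described (EtherType 0x88E5, a TCI/AN octet, a short-length octet, a 32-bit packet number, and an optional 8-byte Secure Channel Identifier). The function name `build_sectag` and its parameters are hypothetical, and the sketch only formats the header: it performs no encryption and computes no integrity check value.

```python
import struct

MACSEC_ETHERTYPE = 0x88E5  # EtherType used by MACsec frames (IEEE 802.1AE)

# Flag bits within the TCI/AN octet of the SecTAG
TCI_SC = 0x20  # an explicit 8-byte SCI follows the packet number
TCI_E = 0x08   # user data is encrypted
TCI_C = 0x04   # user data was changed by the cipher


def build_sectag(packet_number, sci=None, encrypted=True, an=0, short_length=0):
    """Return the SecTAG bytes for one frame (hypothetical helper).

    packet_number: 32-bit counter incremented for every protected frame.
    sci:           optional 8-byte Secure Channel Identifier.
    an:            2-bit association number selecting the active key.
    short_length:  6-bit length field, non-zero only for short payloads.
    """
    if not 0 <= an <= 3:
        raise ValueError("association number must fit in 2 bits")
    if not 0 <= short_length <= 0x3F:
        raise ValueError("short length must fit in 6 bits")

    tci_an = an
    if encrypted:
        tci_an |= TCI_E | TCI_C
    if sci is not None:
        if len(sci) != 8:
            raise ValueError("SCI must be exactly 8 bytes")
        tci_an |= TCI_SC

    # Network byte order: EtherType (2), TCI/AN (1), SL (1), PN (4)
    tag = struct.pack("!HBBI", MACSEC_ETHERTYPE, tci_an, short_length, packet_number)
    return tag + (sci if sci is not None else b"")


if __name__ == "__main__":
    # Example SCI: a made-up 6-byte MAC address plus a 2-byte port identifier
    example_sci = bytes.fromhex("001122334455") + b"\x00\x01"
    print(build_sectag(1, sci=example_sci).hex())
```

Because every frame carries its own tag and packet number, each packet can be verified and decrypted independently of the others, which is what distinguishes this approach from bulk Layer 1 encryption of the whole pipe.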

A DCI interconnect box typically uses only one of these two security technologies. However, as the number of data centers increases, a solution that implements both methods becomes necessary, so that data centers using different security methods can communicate flexibly. This requires a DCI platform that is flexible and easy to reconfigure from one security solution to the other. With this flexibility, data centers built on different vendors' technologies can exchange data.
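As a rough illustration of what such configurability could look like in control software, the sketch below picks a security mode that both ends of a link support. Everything in it is an assumption made for illustration: the `SecurityMode` names, the `choose_mode` helper, and the preference order (bulk Layer 1 first, on the grounds that it is described above as the most cost-effective option for large point-to-point pipes). It does not reflect any particular vendor's interface.

```python
from enum import Enum


class SecurityMode(Enum):
    """Security schemes a hypothetical DCI box might support."""
    BULK_LAYER1 = "bulk-layer1"  # whole-pipe encryption, e.g. AES-256
    MACSEC = "macsec"            # per-packet Layer 2 security (IEEE 802.1AE)


# Assumed preference order for this sketch only
DEFAULT_PREFERENCE = (SecurityMode.BULK_LAYER1, SecurityMode.MACSEC)


def choose_mode(local_modes, remote_modes, preference=DEFAULT_PREFERENCE):
    """Return the most preferred mode supported by both ends, or None."""
    common = set(local_modes) & set(remote_modes)
    for mode in preference:
        if mode in common:
            return mode
    return None


if __name__ == "__main__":
    flexible_box = {SecurityMode.BULK_LAYER1, SecurityMode.MACSEC}
    macsec_only_box = {SecurityMode.MACSEC}
    print(choose_mode(flexible_box, macsec_only_box))
```

A box that implements both schemes can interoperate with a peer that implements only one of them, whereas two single-scheme boxes with different schemes have no common mode and `choose_mode` returns `None`.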

Although the number of new data centers is increasing rapidly, existing data centers must also be upgraded as new standards are adopted on both the line side and the client side. DCI interconnect boxes must therefore be flexible enough to handle multiple upgrade cycles across multiple generations of network interfaces. The cost of upgrading network equipment is part of the driving force behind this cross-generation requirement. For example, a 100Gbps transmission card costs 100 times as much as a comparable switching port, so replacing these devices every three years is not cost-effective. This flexibility helps data center operators take the DCI interconnect box out of the upgrade cycle.

DCI Interconnect Box Architecture

Because it must support different security technologies and regular upgrade cycles, the DCI interconnect box architecture needs to adapt to the functional requirements of each deployment while allowing straightforward evolution as technologies and standards change.

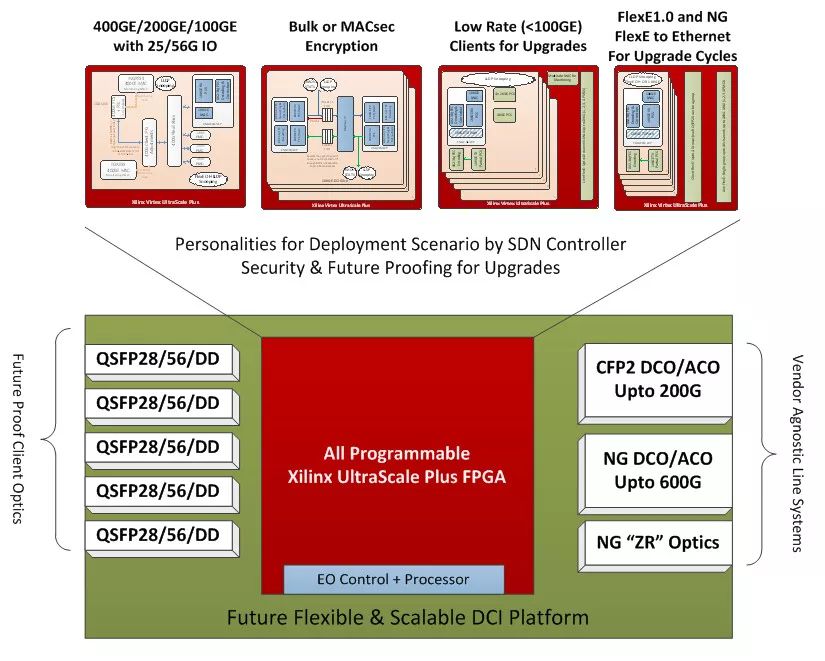

Figure 1 DCI Interconnect Box Scenario

To achieve adaptability to standards and support evolution, there is a need for an architecture that supports multiple digital coherent optical (DCO) line-side interfaces.

DCO interfaces are increasingly deployed in pluggable form, so the architecture should support the line-side interface features of different vendors to achieve maximum flexibility.

The client-side interface needs to support Ethernet rates from 10GE to 400GE, as well as newer standards such as FlexE.

To connect the client side to the line side, the solution must provide not only the interface functionality but also the security scheme the application requires.

Programmable logic such as Xilinx UltraScale+ FPGAs offers DCI interconnect box designers a number of advantages. Highly flexible programmable I/O enables any-to-any interfacing, giving both the client-side and line-side interfaces the PHY support they need.

The parallel nature of programmable logic also allows algorithms to be pipelined for optimal throughput. The same parallelism makes the solution more deterministic, because it eliminates traditional system bottlenecks.
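The throughput benefit of pipelining can be estimated with a simple cycle-count model. The sketch below assumes an idealized pipeline in which every stage takes exactly one clock cycle and never stalls. The function names and the example figures (1000 data blocks, 4 stages) are illustrative assumptions, not measurements of any device.

```python
def sequential_cycles(num_blocks, num_stages):
    """Cycles needed when each block finishes all stages before the next starts."""
    return num_blocks * num_stages


def pipelined_cycles(num_blocks, num_stages):
    """Cycles needed when a new block enters the pipeline every cycle.

    The first block needs num_stages cycles to emerge; each further block
    completes one cycle after the previous one.
    """
    if num_blocks == 0:
        return 0
    return num_stages + num_blocks - 1


if __name__ == "__main__":
    blocks, stages = 1000, 4  # illustrative values only
    seq = sequential_cycles(blocks, stages)
    pipe = pipelined_cycles(blocks, stages)
    print(f"sequential: {seq} cycles, pipelined: {pipe} cycles")
    print(f"speed-up: {seq / pipe:.2f}x")
```

Under these assumptions the speed-up approaches the number of pipeline stages as the number of blocks grows, and one result leaves the pipeline on every cycle once it is full, which is the source of the deterministic behavior described above.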

In addition, programmable logic can be upgraded in the field to support new protocol revisions as standards are adopted. This capability lets the DCI box provide the features each application requires. Because the change can be programmed by the SDN controller, an FPGA-based DCI box is extremely malleable. In today's SDN-controlled network environments, such application-specific DCI box features can be a huge advantage.

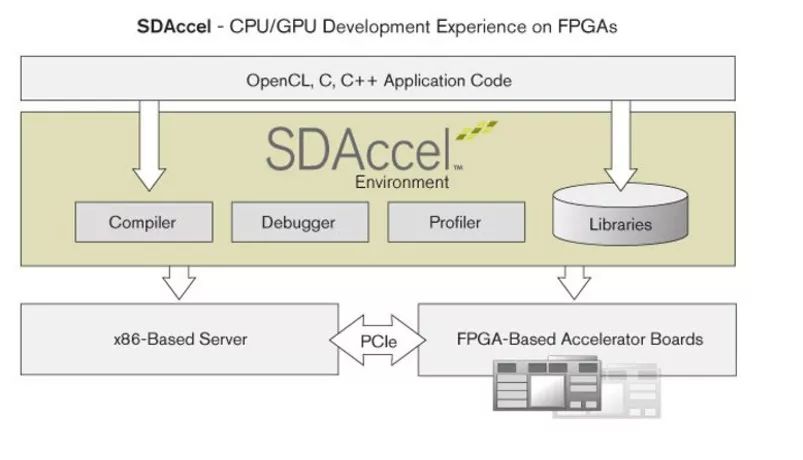

Figure 2 SDx development environment

For FPGA application development, a number of advanced software-defined environments are available to accelerate the work. They include the SDAccel, SDNet and SDSoC design environments, collectively known as SDx. These environments support the development of FPGA applications using high-level synthesis. When combined with the Reconfigurable Acceleration Stack, they let developers integrate with the data center using industry-standard frameworks and libraries.

Summary

Data centers are experiencing significant growth and are becoming more and more interconnected through technologies such as DCI. The DCI interconnect box provides the interconnect functions and secures data transactions, while offering an upgrade path as DCI and data center functions and standards evolve.

FPGAs such as the Xilinx UltraScale+ family offer a flexible, high-performance solution, and the SDx toolchain provides an advanced design environment that accelerates its development.
