This is an ordinary morning in 1998.

As soon as work started, the boss called Zhang Dapang into his office and, sipping his tea comfortably, said: "Dapang, why is the website our company built getting slower and slower?"

Fortunately, Zhang Dapang had noticed the problem too and came prepared. He said helplessly: "Sigh, I checked the system yesterday. Traffic keeps growing, and the CPU, disk, and memory are all overwhelmed. Response times at peak hours keep getting worse."

Then he ventured: "Boss, could we buy a better machine to replace our old, worn-out server? I hear IBM's servers are excellent and very powerful. How about getting one?"

(Author's note: this is called vertical scaling, or scale-up.)

"Well, you are very good. Do you know how expensive the machine is?! Our small company can't afford it!" The owner of the door immediately denied it.

"This..." Big fat meant that the donkey was poor.

"You will discuss with the CTO Bill and come up with a plan tomorrow."

The boss didn't care about the process; he only wanted results.

1 Hiding the Real Servers

Zhang Dapang went to find Bill.

He relayed the boss's instructions, still a little agitated.

Bill laughed: "I've been thinking about this lately too, and I wanted to discuss it with you: what if we buy a few cheap servers, deploy the system on several of them, and scale out?"

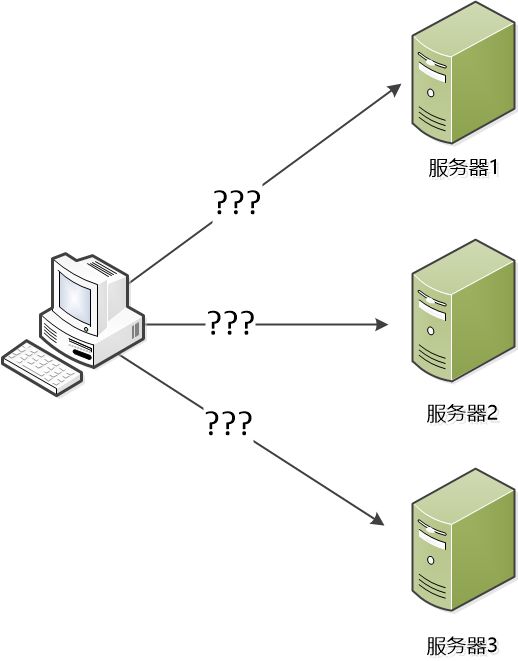

Scale out? Zhang Dapang thought it through: if the system were deployed on several servers, user requests could be spread across them, and the load on any single server would drop considerably.

"However," Zhang Dafa asked. "There are more machines. Each machine has an IP. The user may be confused. Which one to visit?"

"You certainly can't expose these servers. From the customer's point of view, it's best to have only one server," said Bill.

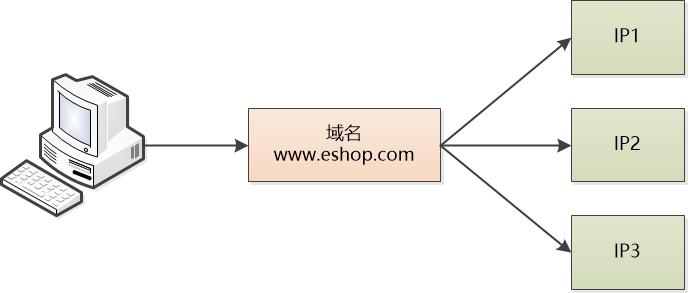

Zhang Dapang's eyes lit up as an idea struck him: "Got it! We already have an intermediate layer, namely DNS. We can configure it so that our website's domain name maps to the IPs of multiple servers. Users only ever deal with our domain name, and DNS can hand out the IPs in rotation: when user 1's machine resolves the domain name, DNS returns IP1; when user 2's machine resolves it, DNS returns IP2... Wouldn't that balance the load across the machines?"
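Zhang Dapang's round-robin idea can be sketched as a toy resolver in Python. This is a simplified model, not how a production DNS server is configured: `RoundRobinDNS`, the domain `www.example.com`, and the 192.0.2.x documentation addresses are all hypothetical. Real DNS servers usually return every address and rotate their order between responses; here each query simply gets the next address in turn, as in the story.

```python
from itertools import cycle


class RoundRobinDNS:
    """Toy DNS server (hypothetical): one domain name, several server IPs, handed out in turn."""

    def __init__(self, records: dict[str, list[str]]) -> None:
        # One endless rotation per domain name
        self._rotations = {name: cycle(ips) for name, ips in records.items()}

    def resolve(self, name: str) -> str:
        # Every query receives the next IP in the rotation
        return next(self._rotations[name])


dns = RoundRobinDNS({"www.example.com": ["192.0.2.1", "192.0.2.2", "192.0.2.3"]})
answers = [dns.resolve("www.example.com") for _ in range(4)]
print(answers)  # ['192.0.2.1', '192.0.2.2', '192.0.2.3', '192.0.2.1']
```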

Bill thought for a moment and spotted a hole: "There's a big problem with that. DNS is a layered system with caches along the way, and the client's machine has its own cache too. If a machine fails, name resolution can keep returning that failed machine's IP, and every user sent there will run into trouble. Even if we remove the machine's IP from DNS, the cached entries make it a real headache."
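Bill's objection can be seen in a toy client-side cache. The `CachingResolver` class, its `ttl` parameter, and the addresses are hypothetical, and the current time is passed in explicitly so the behaviour is deterministic. Once an answer is cached, fixing the DNS record does nothing for this client until the entry expires:

```python
from typing import Callable


class CachingResolver:
    """Toy client-side DNS cache (hypothetical). An answer is reused for `ttl`
    seconds, whether or not the server behind that IP is still alive."""

    def __init__(self, upstream: Callable[[str], str], ttl: float) -> None:
        self.upstream = upstream  # asks the authoritative DNS: name -> IP
        self.ttl = ttl
        self._cache: dict[str, tuple[str, float]] = {}  # name -> (IP, expiry time)

    def resolve(self, name: str, now: float) -> str:
        cached = self._cache.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # cache hit: the upstream DNS is never consulted
        ip = self.upstream(name)
        self._cache[name] = (ip, now + self.ttl)
        return ip


zone = {"www.example.com": "192.0.2.1"}
resolver = CachingResolver(lambda name: zone[name], ttl=300)

first = resolver.resolve("www.example.com", now=0)
zone["www.example.com"] = "192.0.2.2"  # 192.0.2.1 dies; the operator updates DNS
stale = resolver.resolve("www.example.com", now=60)   # still the dead 192.0.2.1
fresh = resolver.resolve("www.example.com", now=301)  # new IP only after the TTL expires
```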

Zhang Dapang really hadn't thought about the problems caching would cause. He scratched his head: "That does make it tricky."

2 Sleight of Hand

"How about if we develop a software for load balancing ourselves?" Bill took another path.

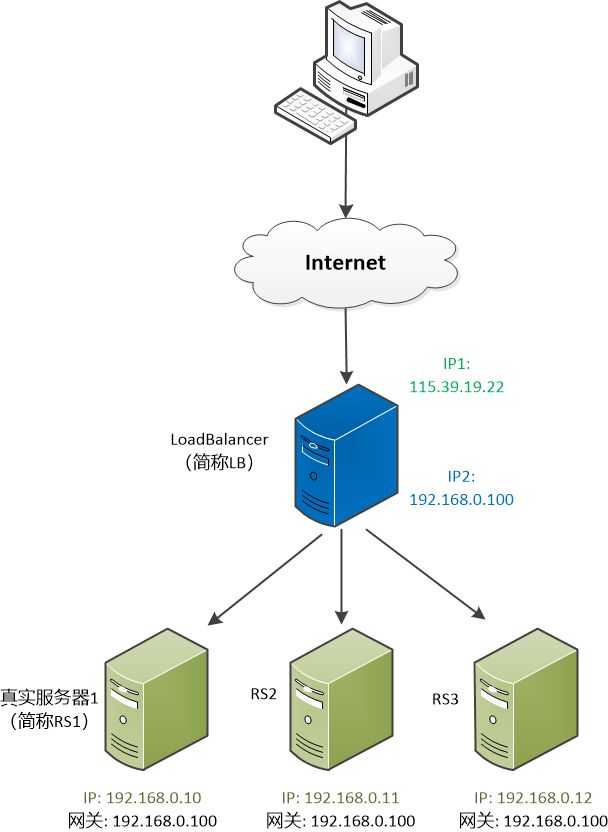

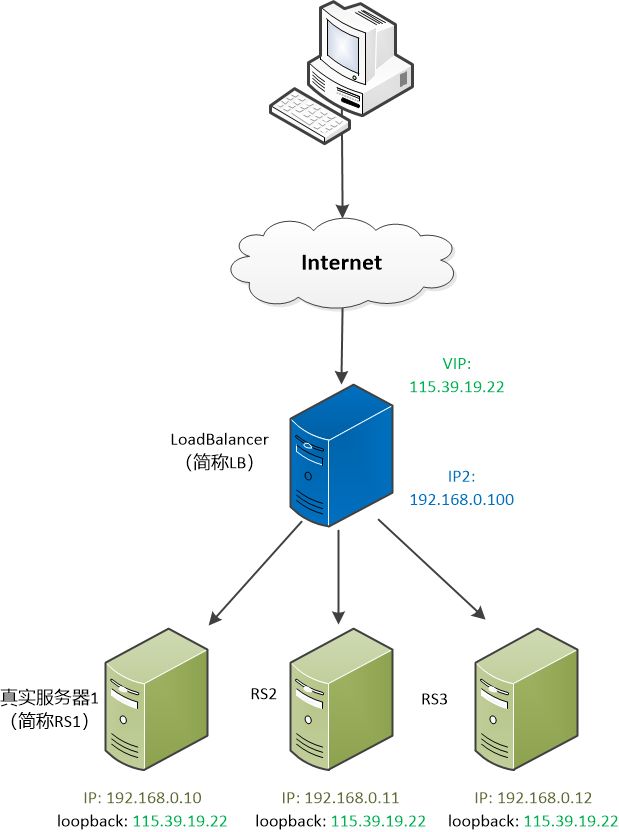

To illustrate his idea, he drew a picture on the whiteboard: "Look at the blue server in the middle. Let's call it the Load Balancer (LB for short). Users send their requests to it, and it then forwards them to the individual servers."

Zhang Dapang examined the diagram carefully.

The Load Balancer (LB) has two IPs: an external one (115.39.19.22) and an internal one (192.168.0.100). Users only see the external IP. Behind it are three servers that actually provide the service, called RS1, RS2, and RS3 (RS for Real Server), and their gateways all point to the LB.

"But how do you forward the request? Well, what exactly is the user's request?" Zhang was fat.

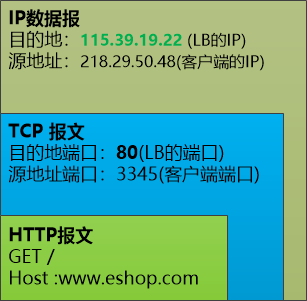

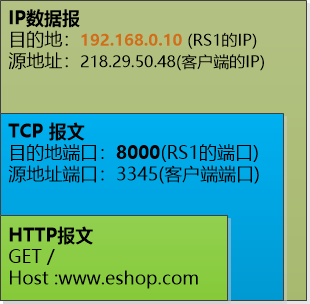

"You forgot your computer network? Is it the packet sent by the user! You look at this layer encapsulated data packet, the user made a HTTP request, wants to visit the homepage of our website, this HTTP request is put To a TCP packet, it is placed in an IP datagram. The final destination is our Load Balancer (115.39.19.22)."

(Note: the figure of the packet the client sends to the LB omits the data link layer frame.)
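The nesting Bill describes (HTTP inside TCP inside IP) can be modelled with plain Python dataclasses. The class and field names and the client's address and port are hypothetical; only the Load Balancer address 115.39.19.22 comes from the article, and port 80 is simply the standard HTTP port. Like the figure, the sketch leaves out the data link layer frame.

```python
from dataclasses import dataclass


@dataclass
class HttpRequest:
    method: str
    path: str


@dataclass
class TcpSegment:
    src_port: int
    dst_port: int
    payload: HttpRequest  # the HTTP message travels inside TCP


@dataclass
class IpDatagram:
    src_ip: str
    dst_ip: str
    payload: TcpSegment  # the TCP segment travels inside IP


packet = IpDatagram(
    src_ip="203.0.113.5",  # hypothetical client address
    dst_ip="115.39.19.22",  # the Load Balancer's external IP
    payload=TcpSegment(
        src_port=50000,  # hypothetical client port
        dst_port=80,  # standard HTTP port
        payload=HttpRequest(method="GET", path="/"),
    ),
)

# The receiver peels the layers off in reverse order
print(packet.dst_ip, packet.payload.dst_port, packet.payload.payload.path)
```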

"But this packet is sent to Load Balancer. How do you send it to the back server?"

Bill said: "We pull a switcheroo. Say the Load Balancer wants to send this packet to RS1 (192.168.0.10). It tampers with the packet, changing it to look like this, and then the IP datagram can be forwarded to RS1 for processing."

(The LB tampers with the packet, changing the destination IP and port to those of RS1.)

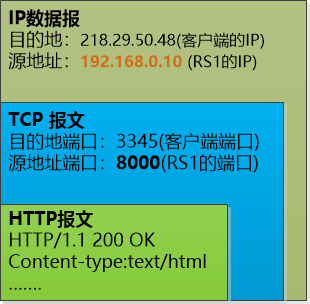
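The trick on the way in can be sketched as a pure function. The `Packet` class, the client address and port, and RS1's listening port (80) are assumptions; real NAT code also has to recompute the IP and TCP checksums, which this sketch omits. Only the destination changes, so RS1 still sees the client's real address and knows where to reply:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    payload: bytes


def rewrite_destination(packet: Packet, real_ip: str, real_port: int) -> Packet:
    """Inbound NAT on the Load Balancer: make the chosen real server the destination.
    The source (the client) is left alone. Checksum updates are omitted."""
    return replace(packet, dst_ip=real_ip, dst_port=real_port)


from_client = Packet("203.0.113.5", 50000, "115.39.19.22", 80, b"GET / HTTP/1.1\r\n\r\n")
to_rs1 = rewrite_destination(from_client, "192.168.0.10", 80)
print(to_rs1.dst_ip, to_rs1.src_ip)  # 192.168.0.10 203.0.113.5
```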

"RS1 is finished processing. To return to the homepage's HTML, we must also encapsulate the HTTP message layer:" Zhang Dafei understood what happened:

(RS1 finishes processing and sends the result toward the client.)

"Because LB is a gateway, it will also receive this data packet, and it will be able to display it again, replace the source address and source port with its own, and send it to the customer."

(The LB tampers with the packet again, changing the source address and port to its own, so the client never notices.)
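The rewrite on the way out is the mirror image. This sketch assumes a single external address and port for the Load Balancer; a real implementation would look up the original address in its connection-tracking state and also fix the checksums. `Packet`, `rewrite_source`, and every address except 115.39.19.22 and 192.168.0.10 are hypothetical:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    payload: bytes


LB_EXTERNAL_IP = "115.39.19.22"
LB_PORT = 80  # assumed: the single port the Load Balancer exposes


def rewrite_source(reply: Packet) -> Packet:
    """Outbound NAT: the real server's reply leaves with the Load Balancer's
    own address and port as its source. Checksum updates are omitted."""
    return replace(reply, src_ip=LB_EXTERNAL_IP, src_port=LB_PORT)


from_rs1 = Packet("192.168.0.10", 80, "203.0.113.5", 50000, b"HTTP/1.1 200 OK\r\n\r\n<html>...</html>")
to_client = rewrite_source(from_rs1)
print(to_client.src_ip, to_client.dst_ip)  # 115.39.19.22 203.0.113.5
```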

Zhang Dapang summarized the data flow:

Client --> Load Balancer --> RS --> Load Balancer --> Client

He said excitedly: "What a clever trick. The client has no idea there are several servers behind it. It thinks the Load Balancer is doing all the work."

Bill was already thinking about how the Load Balancer should choose among the real servers behind it. There are many possible strategies, and he wrote some on the whiteboard:

Round robin: the simplest; the servers just take turns.

Weighted round robin: servers with better performance get higher weights, so they are chosen more often.

Least connections: send the request to whichever server is currently handling the fewest connections.

Weighted least connections: least connections, with weights added on top.

......

There are other algorithms and strategies too, to be worked out later.
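The four strategies on the whiteboard can each be sketched in a few lines. These are illustrations, not LVS's actual scheduler code: weighted round robin here uses one common variant, often called smooth weighted round robin, which interleaves the picks instead of sending a run of consecutive requests to the heaviest server. Server names, weights, and connection counts are made up for the example.

```python
from itertools import cycle


class RoundRobin:
    """Round robin: the servers simply take turns."""

    def __init__(self, servers: list[str]) -> None:
        self._turns = cycle(servers)

    def pick(self) -> str:
        return next(self._turns)


class SmoothWeightedRoundRobin:
    """Weighted round robin: a server with weight w is chosen w times in every
    sum(weights) picks, with the picks spread out rather than bunched."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = weights
        self.total = sum(weights.values())
        self.current = {server: 0 for server in weights}

    def pick(self) -> str:
        # Every server earns its weight; the highest score wins and pays back the total
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


def least_connections(active: dict[str, int]) -> str:
    """Send the new connection to the server with the fewest active connections."""
    return min(active, key=active.get)


def weighted_least_connections(active: dict[str, int], weights: dict[str, int]) -> str:
    """Fewest active connections relative to capacity (connections / weight)."""
    return min(active, key=lambda server: active[server] / weights[server])


rr = RoundRobin(["RS1", "RS2", "RS3"])
print([rr.pick() for _ in range(4)])  # ['RS1', 'RS2', 'RS3', 'RS1']

wrr = SmoothWeightedRoundRobin({"RS1": 5, "RS2": 1, "RS3": 1})
print([wrr.pick() for _ in range(7)])  # RS1 five times, RS2 and RS3 once each

active = {"RS1": 10, "RS2": 3, "RS3": 7}
print(least_connections(active))  # RS2
print(weighted_least_connections(active, {"RS1": 5, "RS2": 1, "RS3": 1}))  # RS1
```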

3 Layer 4 or Layer 7?

Zhang Dapang thought of another problem: a single user request may be split into several packets. If our Load Balancer sent those packets to different machines, everything would be a complete mess! He told Bill his concern.

Bill said: "Good question. Our Load Balancer must maintain a table recording which real server each client's packets were forwarded to, so that when the next packet arrives, we can forward it to the same server."

"It seems that this load balancing software needs to be connection-oriented, that is, the fourth layer of the OSI network system, which can be called four-tier load balancing," Bill concluded.

"Since there are four levels of load balancing, is it also possible to implement a seven-tier load balance?"

"That's for sure. If our Load Balancer took out the HTTP layer message data, according to the URL, browser, language and other information, the request is distributed to the real server behind, that is, the seven-tier load balancing However, we will implement a four-storey building at this stage and say it later on in the seven storeys."

Bill told Zhang Dapang to put a team together to develop this load-balancing software.

Zhang Dapang didn't dare slack off. Because the protocol details mattered so much, he bought several books, "TCP/IP Illustrated" Volumes I, II, and III, quickly reviewed C, and then threw himself into development.

4 Separation of Duties

Three months later, the first version of the Load Balancer was finished. It was software that ran on Linux. The company tried it out and it worked really well: with just a few cheap servers, it achieved load balancing.

The boss was very pleased that the problem had been solved without spending much money. He gave Zhang Dapang's development team a 1,000-dollar bonus and took everyone out for a meal.

Zhang Dapang thought the boss was being stingy. He was a little dissatisfied, but developing this software had taught him a great deal of low-level knowledge, especially about TCP, so he let it go.

But the good times didn't last. Zhang Dapang found that the Load Balancer had a bottleneck: all traffic had to pass through it. It had to modify the packets sent by clients and also the packets sent back to them.

Web traffic has another notable characteristic: request packets are short, while response packets often carry large amounts of data. That's easy to understand. An HTTP GET request is very short, but the HTML returned can be very long, which adds even more to the Load Balancer's packet-rewriting workload.

Dapang hurried off to find Bill, who said: "That is indeed a problem. Let's separate requests from responses: the Load Balancer handles only the requests, and each server sends its responses directly to the client. Wouldn't that eliminate the bottleneck?"
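A rough calculation shows why separating the two directions helps. The sizes are hypothetical (a 500-byte GET and a 50,000-byte HTML page), and packet headers and TCP acknowledgements are ignored:

```python
def bytes_through_balancer(request_bytes: int, response_bytes: int, mode: str) -> int:
    """Payload bytes the Load Balancer must handle for one request/response pair."""
    if mode == "nat":  # first design: the LB rewrites packets in both directions
        return request_bytes + response_bytes
    if mode == "direct":  # new idea: the LB sees only the request
        return request_bytes
    raise ValueError(f"unknown mode: {mode}")


REQUEST, RESPONSE = 500, 50_000  # hypothetical sizes
nat = bytes_through_balancer(REQUEST, RESPONSE, "nat")
direct = bytes_through_balancer(REQUEST, RESPONSE, "direct")
print(nat, direct, nat // direct)  # 50500 500 101
```

With these numbers, the Load Balancer in the first design handles about 100 times as many bytes per request as it would if responses bypassed it.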

"How to deal with separately?"

"First, let all the servers have the same IP. Let's call it VIP (115.39.19.22 in the picture)."

Developing the first version of the Load Balancer had given Zhang Dapang plenty of experience.

He asked: "You mean binding that VIP to the loopback interface of each real server? But there's a problem: if so many servers share the same IP, which one should handle an incoming IP packet?"

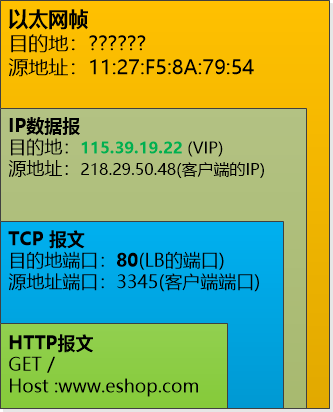

"Note that IP packets are actually sent over the data link layer. You look at this picture."

Zhang Dapang saw that the client's HTTP message was again encapsulated in a TCP segment with port 80, and that the destination of the IP datagram was 115.39.19.22 (the VIP).

The question mark in the figure is the destination MAC address. How do we get it?

Right: the ARP protocol broadcasts a query for an IP address (115.39.19.22), and the machine that owns that IP replies with its MAC address. But now several machines have the same IP (115.39.19.22). What then?

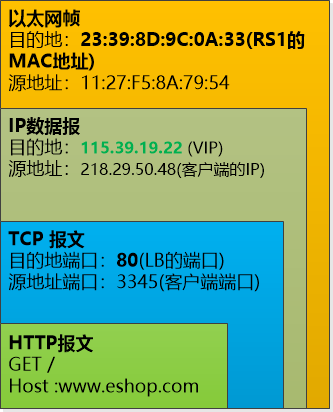

Bill said: "We let only the Load Balancer answer ARP requests for the VIP (115.39.19.22), and suppress ARP replies for the VIP on RS1, RS2, and RS3. Then the Load Balancer is uniquely identified, right?"
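The effect of Bill's rule can be modelled as a LAN in which every machine holds the VIP but only the Load Balancer answers ARP for it. On Linux real servers this is commonly arranged by putting the VIP on the loopback interface and setting the `arp_ignore` and `arp_announce` sysctls; the Python model below only imitates the outcome. The `Host` class, the MAC addresses, and the RS2 and RS3 IPs are hypothetical:

```python
from dataclasses import dataclass, field
from typing import Optional

VIP = "115.39.19.22"


@dataclass
class Host:
    name: str
    mac: str
    nic_ips: set[str]  # addresses on the LAN interface: announced via ARP
    loopback_ips: set[str] = field(default_factory=set)  # held, but never announced

    def owns(self, ip: str) -> bool:
        # The host processes IP packets addressed to any address it holds
        return ip in self.nic_ips or ip in self.loopback_ips

    def arp_reply(self, target_ip: str) -> Optional[str]:
        # Answer "who has target_ip?" only for addresses on the LAN interface
        return self.mac if target_ip in self.nic_ips else None


lan = [
    Host("LB", "00:00:5e:00:53:01", {VIP}),
    Host("RS1", "00:00:5e:00:53:0a", {"192.168.0.10"}, {VIP}),
    Host("RS2", "00:00:5e:00:53:0b", {"192.168.0.11"}, {VIP}),
    Host("RS3", "00:00:5e:00:53:0c", {"192.168.0.12"}, {VIP}),
]

# ARP broadcast: "who has 115.39.19.22?"
replies = []
for host in lan:
    mac = host.arp_reply(VIP)
    if mac is not None:
        replies.append((host.name, mac))
print(replies)  # [('LB', '00:00:5e:00:53:01')]
```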

So that's how it works! Zhang Dapang suddenly understood.

Once the Load Balancer receives this IP packet, it can use a scheduling policy to pick one of RS1, RS2, and RS3, say RS1 (192.168.0.10). It leaves the IP datagram untouched, wraps it in a data link layer frame whose destination is RS1's MAC address, and forwards it directly.

When RS1 (192.168.0.10) receives the frame and unwraps it, it finds that the destination IP is 115.39.19.22, which is also its own IP (bound to its loopback interface), so it can process the packet.

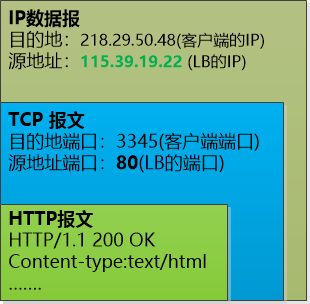

After processing, RS1 can send the response straight back to the client without going through the Load Balancer at all, because its own address is also 115.39.19.22.

The client still sees only the single address 115.39.19.22 and has no idea what happens behind the scenes.

Bill added: "Because the Load Balancer doesn't modify the IP datagram at all, the TCP port number isn't changed either. That means the ports on RS1, RS2, and RS3 must match the Load Balancer's."
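Direct routing itself can be sketched as a change to the Ethernet header only. The frame and datagram classes, the MAC addresses, and the client address are hypothetical. Because the IP datagram is passed on untouched, its destination stays 115.39.19.22 on port 80, which is why each real server must hold the VIP and listen on the same port:

```python
from dataclasses import dataclass, replace

VIP = "115.39.19.22"


@dataclass(frozen=True)
class IpDatagram:
    src_ip: str
    dst_ip: str
    dst_port: int
    payload: bytes


@dataclass(frozen=True)
class EthernetFrame:
    src_mac: str
    dst_mac: str
    datagram: IpDatagram


LB_MAC = "00:00:5e:00:53:01"
REAL_SERVER_MACS = {"RS1": "00:00:5e:00:53:0a", "RS2": "00:00:5e:00:53:0b", "RS3": "00:00:5e:00:53:0c"}


def direct_route(frame: EthernetFrame, server: str) -> EthernetFrame:
    """DR forwarding: only the link-layer addresses change. The IP datagram,
    including the VIP destination and the port, is passed on untouched."""
    return replace(frame, src_mac=LB_MAC, dst_mac=REAL_SERVER_MACS[server])


incoming = EthernetFrame(
    src_mac="00:00:5e:00:53:ff",  # hypothetical upstream router
    dst_mac=LB_MAC,  # ARP resolved the VIP to the Load Balancer
    datagram=IpDatagram("203.0.113.5", VIP, 80, b"GET / HTTP/1.1\r\n\r\n"),
)
to_rs1 = direct_route(incoming, "RS1")

rs1_addresses = {"192.168.0.10", VIP}  # VIP bound to RS1's loopback interface
print(to_rs1.dst_mac, to_rs1.datagram.dst_ip in rs1_addresses)  # 00:00:5e:00:53:0a True
```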

As before, Zhang Dapang summarized the data flow:

Client --> Load Balancer --> RS --> Client

Bill asked: "So, what do you think? Will this work?"

Zhang Dapang thought it over. The approach seemed flawless and efficient: the Load Balancer only has to pass each user request to a particular server, and its job is done. Everything else is handled by that server and has nothing to do with the Load Balancer.

He said happily: "Yes! I'll get a team together and build it."

Postscript: what this article describes is essentially the principle behind the well-known open-source software LVS (Linux Virtual Server). The two load-balancing methods discussed above are the NAT and DR (Direct Routing) modes of LVS.

LVS is a free software project started by Dr. Zhang Wensong in May 1998, and it is now part of the Linux kernel. Thinking back, at that time I was still happily tinkering with my web pages. I had only recently learned to install and use Linux, my server-side development was limited to ASP, and I had never even heard of a load-balancing concept like LVS.

Programming languages can be learned and knowledge gaps can be filled, but a gap in perspective and vision is a chasm that is very hard to cross!

(The End)

"Linux Reading Field" is a professional Linux and system software technology exchange community, Linux system personnel training base, and enterprise and Linux talent connection hub.
