Although virtual reality (VR) and augmented reality (AR) technologies feel futuristic, they are not new. In the 1990s, VR boomed alongside the rapid growth of three-dimensional display technology and attracted attention from many fields. In 1995, Nintendo of Japan released its revolutionary stereoscopic game console, the Virtual Boy, which immediately became one of the most watched technology products of the year. However, poor image quality, high prices, large latency, and a thin content ecosystem meant that none of the early VR products achieved significant success, and the first VR wave subsided. In the 21st century, computer hardware and software advanced greatly, and computers became powerful enough to support VR/AR products with better graphics and lower latency. After Apple released the iPhone in 2007, the smartphone industry grew at an unprecedented pace. This growth drove down the price and size of display devices and sensors while improving their performance, laying a solid technical foundation for the popularization of VR/AR products.

Google announced Google Glass in April 2012, and Google co-founder Sergey Brin wore it at a press event featuring a skydiving demonstration, marking the product's official debut. As one of the most watched technology products of 2012, Google Glass set off a new wave of VR interest. Other technology giants quickly followed, investing heavily in VR research and development and, building on it, bringing AR and mixed reality (MR) onto a fast development track. As VR/AR technology has matured, its applications have spread to many fields, including video, games, engineering, the military, education, healthcare, real estate, and retail. Many companies have capitalized on this growth and gained considerably in brand recognition and investment.

Because of their wide range of applications and huge market value, VR/AR technologies have drawn attention from consumers and industry alike and are widely regarded as the most likely candidate for the "next-generation computing platform" after personal computers and smartphones. To keep pace and secure a foothold before this next-generation platform arrives, leading companies across industries are actively moving to claim a place in the VR/AR industry.

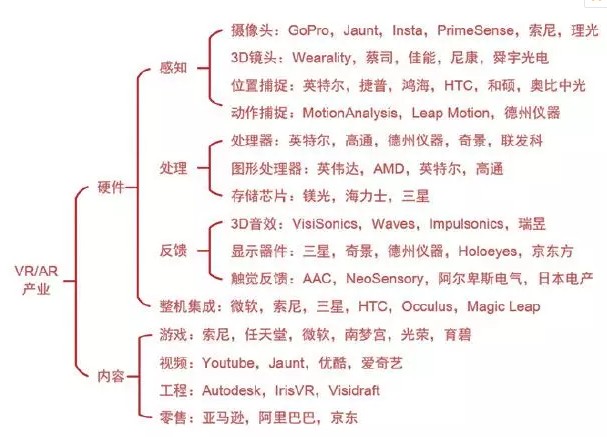

Broadly, the current VR/AR industry can be divided into two parts: hardware and content. Hardware comprises four major segments: perception, processing, feedback, and whole-device integration; content covers video, games, live streaming, and more. Figure 1 shows the current VR/AR industry layout and representative companies in each major field. The United States, South Korea, and Taiwan lead in hardware, while the United States, Japan, and China are slightly ahead in content.

Figure 1 Industrial layout of international companies in the VR/AR industry

Virtual reality and augmented reality industry development forecast

Although the VR/AR industry layout is fairly complete, the industry is still at an early stage in both technology and market compared with the PC and smartphone industries. This immaturity shows in four ways: high hardware cost, high product prices, low content quality, and a small market. As technology advances, costs fall, and content grows richer, the VR/AR industry is bound to develop further. Goldman Sachs forecasts that by 2025 the VR/AR industry will create US$80 billion in value each year: nearly US$45 billion from hardware, concentrated in head-mounted displays (HMDs), processors, tracking systems, and haptic feedback, and about US$35 billion from software, concentrated in games, video, and live streaming (Figure 2). China needs to follow the development trends of the VR/AR industry closely, keep pace with it, and increase industrial investment to sustain the healthy, long-term growth of a VR/AR industry with independent intellectual property rights.

Display technology in virtual reality and augmented reality products

The difference between virtual reality and augmented reality

To understand the technical solutions used in current mainstream VR/AR products, we first need to distinguish VR from AR. VR products place users in a world other than the one around them. This world can be entirely computer-generated (such as scenes in games and films) or a real place that is not in front of the user (such as a live sporting event). AR products let users see the real scene in front of them while also seeing objects that do not exist in it. Generally speaking, VR products use opaque screens, whereas AR products use see-through displays.

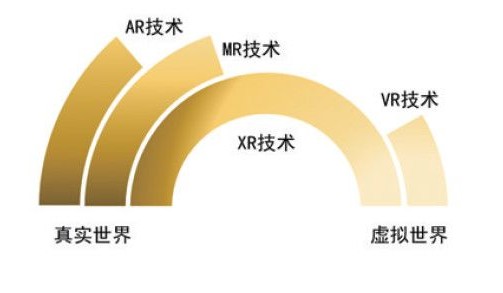

Beyond VR and AR, several related concepts have emerged in recent years, such as mixed reality (MR) and extended reality (XR). For example, Microsoft calls its self-developed headset, HoloLens, an MR product rather than an AR product. In essence, MR is an advanced form of AR that lets users interact with virtual content placed in the real world, bringing the displayed content closer to reality. XR is a newer concept proposed by the US company Qualcomm; it covers VR, AR, and MR, and lets users freely adjust how much the real and virtual worlds are blended. In principle, all of these concepts fall under the VR/AR umbrella (Figure 4). As the technology develops, VR and AR are expected to converge, and devices will be able to switch intelligently from one mode to another. This article therefore does not distinguish these concepts in detail and uses "VR/AR" throughout.

Mainstream virtual reality and augmented reality product solutions

1. VR/AR products based on binocular vision

Binocular-vision VR products, represented by head-mounted devices such as Google's Daydream View, Samsung's Gear VR, HTC's Vive, Sony's PlayStation VR, and Oculus's Rift, are currently the mainstream VR display systems; their main purpose is to create an immersive three-dimensional experience. The principle is shown in Figure 5, and there are two main implementations. Figure 5(a) shows the Fresnel lens solution, currently the most common. Two screens (or the left and right halves of one screen) display two slightly different images, which reach the eyes through Fresnel lenses. The brain fuses the two images, producing an immersive sense of depth. Figure 5(b) shows the lenticular (cylindrical) lens solution, which is rare in consumer VR products. The image to be displayed is processed into separate views for the left and right eyes, and these views are interleaved on the screen in a fixed pattern so that each view reaches the corresponding eye; the brain then fuses them into a three-dimensional image.
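The depth cue both implementations rely on is the horizontal offset (parallax) between the left- and right-eye images. A minimal sketch, assuming a simple pinhole-eye model in which both eyes view a virtual image plane at distance D set by the headset optics; the interpupillary distance and distances are illustrative values, not figures from any product. By similar triangles, a point straight ahead at depth Z must be drawn with parallax p = e(Z − D)/Z, where e is the interpupillary distance.

```python
def screen_parallax(ipd_m: float, image_plane_m: float, depth_m: float) -> float:
    """Right-eye image x minus left-eye image x on the virtual image plane.

    Pinhole-eye model: eyes at x = -ipd/2 and +ipd/2, image plane at
    distance image_plane_m, scene point straight ahead at depth_m.
    Positive = uncrossed parallax (point appears behind the plane),
    negative = crossed parallax (point appears in front of it).
    """
    if ipd_m <= 0 or image_plane_m <= 0 or depth_m <= 0:
        raise ValueError("distances must be positive")
    return ipd_m * (depth_m - image_plane_m) / depth_m


if __name__ == "__main__":
    ipd = 0.063   # assumed interpupillary distance (63 mm)
    plane = 2.0   # assumed virtual image distance of the headset optics (m)
    for depth in (0.5, 2.0, 10.0):
        p_mm = 1000 * screen_parallax(ipd, plane, depth)
        print(f"point at {depth:4.1f} m -> parallax {p_mm:+6.1f} mm")
```

Points on the image plane need zero parallax, and as depth grows the parallax approaches the full interpupillary distance.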

The basic principle of binocular-vision AR products, represented by Microsoft HoloLens and Atheer, is very similar to that of binocular-vision VR products. The difference is that an AR product must let the eye see the external environment while also seeing the displayed image. To achieve this, the optical path can be designed so that the screen showing the virtual content and the window onto the real environment do not overlap, or a transparent display can be used so that light from both the virtual scene and the real environment passes through it. Figure 6 shows an AR design that uses a waveguide and a holographic optical element (HOE). A microdisplay shows the image, which is coupled into the waveguide through a free-form optical element and propagates to the HOE. The HOE both transmits ambient light to the eye and diffracts the light guided through the waveguide toward the eye, superimposing the virtual scene on the real one.

To reproduce real-world motion parallax and occlusion and make the VR/AR experience more realistic, binocular-vision VR/AR products usually rely on gravity sensors (accelerometers) and gyroscopes to track head motion. However, because the point on which the eyes converge and the point on which they focus do not coincide, these products inevitably produce a vergence-accommodation (convergence-focusing) conflict (Figure 7). Wearing them for long periods can cause dizziness and fatigue, so the experience is far from ideal.
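In a binocular headset the eyes always focus (accommodate) at the fixed distance of the virtual image plane, while they converge at the depth implied by the parallax. The mismatch is commonly expressed in diopters (inverse meters). A minimal sketch; the 2 m focal distance is an assumed value, not taken from any specific product.

```python
def vergence_accommodation_conflict_d(vergence_m: float, focus_m: float) -> float:
    """Absolute mismatch, in diopters (1/m), between vergence and accommodation distances."""
    if vergence_m <= 0 or focus_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / vergence_m - 1.0 / focus_m)


if __name__ == "__main__":
    focus = 2.0  # assumed fixed focal distance of the headset optics (m)
    for vergence in (0.3, 0.5, 2.0, 10.0):
        conflict = vergence_accommodation_conflict_d(vergence, focus)
        print(f"content at {vergence:4.1f} m -> conflict {conflict:.2f} D")
```

Content rendered near the fixed focal plane is conflict-free; content brought close to the viewer produces the largest mismatch.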

2. VR/AR products based on light field display technology

The Magic Leap One from Magic Leap represents VR/AR products that use light field display technology; the principle is shown in Figure 8. To capture the light field of the image to be displayed, an array of many microlenses is placed between the scene and the camera. Each microlens forms a tiny elemental image of the scene from a slightly different position and viewing angle. When the array contains enough microlenses and the camera pixels are small enough, the camera can be regarded as recording the light field of the scene after it passes through the microlens array. By the reversibility of optical paths, when a display shows these elemental images and their light passes back through a lens array, the recorded scene is reconstructed.

Light field VR/AR products do not require coherent light sources and can display dynamic three-dimensional images. Compared with binocular-vision products, they provide better motion parallax and occlusion. However, with ordinary display devices their resolution is relatively low and their depth range relatively small. Magic Leap's innovation lies in a core technology called a fiber-optic projector, which is smaller and consumes less power than conventional display devices, so the Magic Leap One can display higher-resolution images than other light field VR/AR products on the market. Even so, it has not fully solved the loss of resolution or the vergence-accommodation conflict.
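The resolution loss follows from how a microlens array shares the display's pixels: each microlens becomes one spatial sample, and the pixels behind it become its angular samples (views). A minimal sketch with hypothetical panel dimensions, assuming square lenses that each cover a whole number of pixels:

```python
def light_field_sampling(panel_w_px: int, panel_h_px: int, px_per_lens: int):
    """Return ((spatial_w, spatial_h), (views_x, views_y)) for a microlens-array display."""
    if px_per_lens <= 0 or panel_w_px % px_per_lens or panel_h_px % px_per_lens:
        raise ValueError("each lens must cover a whole number of pixels")
    spatial = (panel_w_px // px_per_lens, panel_h_px // px_per_lens)
    views = (px_per_lens, px_per_lens)
    return spatial, views


if __name__ == "__main__":
    # Hypothetical 3840 x 2160 panel; vary how many pixels sit under each lens.
    for n in (1, 5, 10):
        (sw, sh), (vx, vy) = light_field_sampling(3840, 2160, n)
        print(f"{n:2d} px/lens -> {sw} x {sh} spatial, {vx} x {vy} views")
```

Every extra view along an axis divides the spatial resolution along that axis, which is why light field displays built on ordinary panels look coarse.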

VR/AR solution based on holographic optics

The principle of holographic technology

To overcome the shortcomings of binocular-vision and light field solutions, VR/AR solutions based on holographic optics are now attracting more attention. Holography uses interference fringes to reproduce three-dimensional objects: the amplitude and phase information recorded in the fringes can be fully reconstructed in space when the recording is illuminated by the reference light. Because the reconstruction contains the "whole information" of the object, the technique is aptly called "holography". The holographic process has two steps, "interference recording" and "diffraction reconstruction", as shown in Figure 9.

Let the object wave and the reference wave be O(x,y) and R(x,y), respectively. When they interfere on the hologram recording plane, the recorded intensity distribution is

$$
I(x,y) = |O(x,y) + R(x,y)|^2 = |O(x,y)|^2 + |R(x,y)|^2 + O^*(x,y)R(x,y) + O(x,y)R^*(x,y) \tag{1}
$$

Here |O(x,y)|² and |R(x,y)|² contain only intensity information and no phase information; they form the zero-order (background) term and do not contribute to reconstructing the image. The cross terms O*(x,y)R(x,y) and O(x,y)R*(x,y) contain both intensity and phase information: they convert the field distribution of the object wave into the intensity distribution of interference fringes. These are called the interference terms, and they take part in reconstructing the image. When the hologram is illuminated by the reference wave, the O(x,y)R*(x,y) term yields a wave that differs from the original object wave O(x,y) only in amplitude, with exactly the same phase, so an observer looking at this part of the field sees a virtual image of the object. The O*(x,y)R(x,y) term is the complex conjugate of O(x,y)R*(x,y); it yields the conjugate of the object wave, and an observer of this part of the field sees a real image of the object.
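Equation (1) and the reconstruction argument can be checked numerically. A minimal sketch, assuming NumPy, a random complex field as a stand-in for the object wave, and a unit-amplitude tilted plane wave as the reference (grid size and tilt are arbitrary choices). It verifies the four-term expansion and shows that illuminating the hologram with R splits the field into a zero-order term, a replica of O (virtual image), and a conjugate wave (real image).

```python
import numpy as np

rng = np.random.default_rng(0)
n = 256
X, Y = np.meshgrid(np.arange(n), np.arange(n))

# Stand-in object wave: random amplitude and phase on the recording plane.
O = rng.random((n, n)) * np.exp(2j * np.pi * rng.random((n, n)))
# Off-axis reference: unit-amplitude plane wave tilted along x (assumed 1/8 cycle per pixel).
R = np.exp(2j * np.pi * X / 8)

# Recording: the plate stores only intensity, Eq. (1).
I = np.abs(O + R) ** 2
terms = np.abs(O) ** 2 + np.abs(R) ** 2 + np.conj(O) * R + O * np.conj(R)
assert np.allclose(I, terms.real) and np.allclose(terms.imag, 0)

# Reconstruction: illuminate the hologram with the same reference wave.
U = I * R
zero_order = (np.abs(O) ** 2 + np.abs(R) ** 2) * R
virtual = O * np.abs(R) ** 2    # from the O R* term: same phase as O -> virtual image
real = np.conj(O) * R ** 2      # from the O* R term: conjugate wave -> real image
assert np.allclose(U, zero_order + virtual + real)
print("Eq. (1) expansion and reconstruction terms verified")
```

Because |R| = 1 here, the virtual-image term equals the object wave exactly; with a non-uniform reference it would differ only by the real amplitude factor |R|².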

Computational holography

In a conventional holographic system, where both hologram recording and reconstruction rely on photosensitive materials, the system is called an optical holographic system. However, most photosensitive materials used in experiments, such as film, are single-use and cannot be erased and rewritten. During recording, even a slight vibration of the optical table can shift the interference fringes and degrade the accuracy of the reconstructed image. In addition, after recording, the film must be developed, fixed, and dried to obtain the hologram, which is time-consuming and laborious. With rapid advances in computer hardware and software, computers can gradually replace the holographic plate for recording interference fringes. This technique, known as computational (computer-generated) holography, was proposed by Kozma et al. in 1965.

Computational holography has three main advantages. First, the hologram is generated by a computer, so the experimental environment and operator errors do not affect its quality during recording. Second, the hologram can be stored in various digital formats; compared with optical holograms, it is easier to copy, transmit, and carry, and it can be numerically reconstructed by a computer at any time to verify its correctness. Third, computational holography can record real objects as well as objects that do not physically exist, modeled in three-dimensional software such as AutoCAD and SolidWorks, giving great freedom in the choice of objects to reconstruct. For diffraction reconstruction, a computer-generated hologram usually has to be loaded onto a spatial light modulator.

Figure 10 is a schematic of a computational holography setup used in a VR/AR system. The hologram of the virtual scene is uploaded to the spatial light modulator by the driver. When the reference light illuminates the spatial light modulator, the diffracted light field reaches the eye through a beam splitter, while light from the real environment reaches the eye through the other path of the beam splitter.
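As a concrete example of what might be uploaded to the spatial light modulator, the sketch below computes a phase-only Fourier hologram (kinoform) of a 2-D target using a random diffuser phase. This is a common textbook technique, not the specific method of any system cited here. Assumptions: NumPy, a phase-only SLM with a linear response over 2π and 256 grey levels, and a lens that performs the Fourier transform during reconstruction (modelled by an FFT).

```python
import numpy as np


def kinoform(target_amplitude: np.ndarray, seed: int = 0) -> np.ndarray:
    """Phase-only Fourier hologram of a centred target image; phase in (-pi, pi]."""
    rng = np.random.default_rng(seed)
    diffuser = np.exp(2j * np.pi * rng.random(target_amplitude.shape))
    # Back-propagate from the image (Fourier) plane to the SLM plane.
    slm_field = np.fft.ifft2(np.fft.ifftshift(target_amplitude * diffuser))
    return np.angle(slm_field)  # discard amplitude: the SLM modulates phase only


def to_grey_levels(phase: np.ndarray, levels: int = 256) -> np.ndarray:
    """Map phase linearly onto SLM drive levels 0..levels-1 (assumed linear 2*pi response)."""
    wrapped = np.mod(phase, 2 * np.pi)
    return np.round(wrapped / (2 * np.pi) * levels).astype(int) % levels


def reconstruct(phase: np.ndarray) -> np.ndarray:
    """Intensity in the Fourier plane when the SLM is lit by a uniform plane wave."""
    return np.abs(np.fft.fftshift(np.fft.fft2(np.exp(1j * phase)))) ** 2


if __name__ == "__main__":
    target = np.zeros((128, 128))
    target[48:80, 48:80] = 1.0  # hypothetical square target
    phase = kinoform(target)
    image = reconstruct(phase)
    inside = image[48:80, 48:80].sum() / image.sum()
    print(f"fraction of light in the target square: {inside:.2f}")
    grey = to_grey_levels(phase)
    print("grey levels used:", grey.min(), "to", grey.max())
```

Discarding the amplitude sends part of the light into speckle-like noise outside the target; iterative methods are often used to reduce it.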

Holographic three-dimensional display offers a compact system with no crosstalk, no depth reversal, and no mechanical moving parts, which makes it an ideal VR/AR solution at present. Table 1 compares the three-dimensional visual cues provided by the binocular-vision, light field, and holographic solutions. The holographic solution provides all types of three-dimensional visual cues without vergence-accommodation conflict, offering an excellent viewing experience, and is currently one of the most widely recognized VR/AR solutions.

New development of VR/AR based on computational holography technology

Because the spatial light modulators used in existing holographic displays have a limited space-bandwidth product, both the image size and the viewing angle are small, and current hardware can compute holograms in real time only for small images or narrow viewing angles. Combining holography with near-eye display, by contrast, means that only the information within the eye's field of view needs to be displayed, which improves optical reconstruction, reduces the computational burden, and makes better use of the available information.
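The size-versus-angle trade-off can be made concrete with the grating equation. An SLM with N pixels at pitch p is W = Np wide, and the finest fringe it can display has a period of two pixels, so light is diffracted up to sin θ = λ/(2p). The product W·2 sin θ = Nλ is fixed by the pixel count alone: shrinking the pixels widens the viewing angle but shrinks the hologram by the same factor. A minimal sketch; the 1920-pixel width and 532 nm wavelength are assumed example values.

```python
import math


def slm_geometry(n_pixels: int, pitch_m: float, wavelength_m: float):
    """Return (width_m, max half-angle in degrees, width * 2*sin(theta)) for one SLM axis."""
    sin_theta = wavelength_m / (2 * pitch_m)
    if sin_theta > 1:
        raise ValueError("pitch below half a wavelength: grating equation has no solution")
    width = n_pixels * pitch_m
    half_angle_deg = math.degrees(math.asin(sin_theta))
    return width, half_angle_deg, width * 2 * sin_theta


if __name__ == "__main__":
    n, wavelength = 1920, 532e-9  # assumed pixel count and green laser wavelength
    for pitch in (8e-6, 4e-6, 2e-6):
        w, theta, sbp = slm_geometry(n, pitch, wavelength)
        print(f"p = {pitch*1e6:.0f} um: width {w*1e3:5.2f} mm, "
              f"half-angle {theta:5.2f} deg, W*2sin(theta) = {sbp*1e3:.4f} mm")
```

The last column stays constant for every pitch, which is the one-axis space-bandwidth limit in action.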

In 2014, Moon et al. at Kyungpook National University in South Korea proposed a color holographic near-eye display system using LEDs as light sources and demonstrated its feasibility. The system is binocular, built from two monocular systems. To make the design more compact, the LED light is coupled into a multimode fiber and delivered to the display module. After leaving the fiber, the light passes through the spatial light modulator, a Fourier filter, and the eyepiece, finally forming an image in front of the observer's eye (Figure 11).

In 2015, Chen et al. at the University of Cambridge proposed computing holograms with a tomographic (layer-based) method. Using a head-mounted display concept, they verified the computational efficiency of the new algorithm and the interactivity of the displayed images on a simulated optical path, whose principle is shown in Figure 12. The computer-generated hologram displayed on the spatial light modulator is imaged onto the pupil of the eye by a 4f system. In the experiment, a stop was placed between the two lenses to block the zero-order diffracted light and prevent it from being focused at the wrong position and damaging the eye, ensuring the observer's safety.
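In a 4f system the first lens places the Fourier transform of the SLM field in the plane between the lenses, where the undiffracted zero order collapses to the DC component on the optical axis, so a small stop there removes it. A minimal sketch, assuming NumPy, ideal lenses modelled by FFTs, and a one-pixel stop; it uses an inverse FFT for the second lens so the output is not flipped, whereas a real second lens produces an inverted image.

```python
import numpy as np


def four_f_dc_block(field: np.ndarray, stop_radius_px: float = 1.0) -> np.ndarray:
    """Pass a field through an idealised 4f system with a central stop in the Fourier plane."""
    spectrum = np.fft.fftshift(np.fft.fft2(field))  # first lens: Fourier plane, DC at centre
    ny, nx = field.shape
    yy, xx = np.ogrid[:ny, :nx]
    stop = (yy - ny // 2) ** 2 + (xx - nx // 2) ** 2 < stop_radius_px ** 2
    spectrum[stop] = 0  # the physical stop absorbs the zero order
    return np.fft.ifft2(np.fft.ifftshift(spectrum))  # second lens back to an image plane


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical SLM output: strong uniform (undiffracted) part plus a weak hologram signal.
    slm = 5.0 + 0.1 * np.exp(2j * np.pi * rng.random((64, 64)))
    out = four_f_dc_block(slm)
    print("mean before:", abs(slm.mean()), "mean after:", abs(out.mean()))
```

Only the centre bin is blocked here, so every diffracted component of the hologram passes unchanged.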

In 2017, Liu Juan's team at the Beijing Institute of Technology proposed a see-through three-dimensional near-eye display system that uses a holographic grating as a frequency filter to improve image quality. The grating filter acts as an additive filter in the frequency plane of a 4f system. The input plane of the 4f system holds two holograms uploaded to the spatial light modulator with a certain separation. When the separation between the two holograms and the grating period satisfy certain conditions, the output plane of the 4f system contains the composite field of the two holograms. After this reconstructed wavefront propagates a certain distance, a higher-quality reconstructed image is obtained (Figure 13). The team built the near-eye display device and showed experimentally that it can generate three-dimensional images with depth information and can be worn for observation.

In 2017, Maimone et al. at Microsoft Research proposed VR and AR displays based on phase-only holography. Extending existing techniques, they built a color holographic near-eye display with variable focus and a large field of view, and experimentally verified its ability to display high-resolution color images, control focus per pixel, and expand the field of view. Figure 14 shows the experimental optical path and results for the large-field-of-view, variable-focus system.

Challenges faced by holographic optical VR/AR solutions

Hologram generation algorithm

Holographic VR/AR systems face many challenges in both algorithms and hardware. On the algorithm side, the foremost issue is speeding up the generation of computer-generated holograms.

The point-source method and the polygon (panel) method are the most basic algorithms for generating computer-generated holograms. They divide a three-dimensional object into many points or planar facets, which provide accurate geometric information about the scene. However, VR/AR systems must display very large amounts of three-dimensional scene information, so generating holograms with the point-source or polygon method is very time-consuming and places heavy demands on computer hardware. In 1995, Lucente proposed the look-up table (LUT) method. It precomputes the interference fringes that each point source in object space forms on the hologram plane and stores them in a table. When recording an object, the algorithm only needs to match each object point to its table entry and superimpose the results, avoiding repeated calculation and speeding up hologram generation. However, the LUT method places high demands on memory capacity and read/write performance. Later, Tomoyoshi, Kim, and Nishitsuji improved the LUT method in different ways, increasing the efficiency of table look-up. In addition, the recent rapid development of hardware acceleration technologies such as OpenCL, FPGAs, distributed parallel processing, and array computing has made it possible to speed up the point-source method. Existing research shows that hardware acceleration greatly increases the calculation speed of the point-source method, but these accelerated algorithms demand ever more from computer hardware.
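The difference between the point-source and LUT approaches can be sketched in a few lines. This minimal version assumes NumPy, the paraxial (Fresnel) approximation, amplitude-only object points that sit exactly on the hologram's pixel grid, no occlusion, and dropped constant phase and 1/z factors; wavelength, pitch and grid size are assumed values. It illustrates the basic LUT idea rather than Lucente's exact implementation: because the fringe of a point at depth z is a shifted copy of one master pattern, it can be cropped from a precomputed table instead of recomputed.

```python
import numpy as np

WAVELENGTH = 532e-9  # assumed wavelength (m)
PITCH = 8e-6         # assumed hologram pixel pitch (m)
N = 128              # assumed hologram size: N x N pixels


def fresnel_fringe(d_row_px, d_col_px, z_m):
    """Paraxial (Fresnel) phase pattern of a point source at distance z, offsets in pixels."""
    k = 2 * np.pi / WAVELENGTH
    r2 = (d_row_px * PITCH) ** 2 + (d_col_px * PITCH) ** 2
    return np.exp(1j * k * r2 / (2 * z_m))


def point_source_cgh(points):
    """Direct method: sum the fringe of every (row, col, z, amplitude) object point."""
    rows, cols = np.mgrid[:N, :N]
    hologram = np.zeros((N, N), dtype=complex)
    for r0, c0, z, amp in points:
        hologram += amp * fresnel_fringe(rows - r0, cols - c0, z)
    return hologram


def build_lut(depths):
    """Precompute one (2N-1) x (2N-1) fringe per depth, centred on the table's middle pixel."""
    offsets = np.arange(-(N - 1), N)
    d_row, d_col = np.meshgrid(offsets, offsets, indexing="ij")
    return {z: fresnel_fringe(d_row, d_col, z) for z in depths}


def lut_cgh(points, lut):
    """LUT method: crop the stored fringe around each point instead of recomputing it."""
    hologram = np.zeros((N, N), dtype=complex)
    for r0, c0, z, amp in points:
        table = lut[z]
        top, left = N - 1 - r0, N - 1 - c0
        hologram += amp * table[top:top + N, left:left + N]
    return hologram


if __name__ == "__main__":
    pts = [(10, 20, 0.10, 1.0), (64, 64, 0.15, 0.5), (100, 30, 0.10, 0.8)]  # hypothetical scene
    lut = build_lut({0.10, 0.15})
    same = np.allclose(point_source_cgh(pts), lut_cgh(pts, lut))
    print("LUT matches direct computation:", same)
    print("LUT memory per depth (MB):", lut[0.10].nbytes / 1e6)
```

The table trades computation for memory: each depth costs a (2N−1)² complex array, which is the storage pressure the improved LUT methods try to relieve.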

To address the slow calculation of the point-source and polygon methods and the hardware dependence of the LUT method, Trester et al. proposed a tomographic (layer-based) method. This algorithm first slices the object to be reconstructed into a series of planes, then Fourier-transforms the information in each plane in turn to obtain the computer-generated hologram. Sando et al., Bayraktar et al., Chen et al., and Zhao et al. of Tsinghua University subsequently improved the method in various ways, reducing the computational load and increasing the speed of CGH generation. However, most tomographic CGH algorithms rely on the paraxial approximation, so the quality of optical reconstruction degrades at short distances and the calculation error becomes more serious in systems with a large numerical aperture. In addition, the sampling interval on the scene plane differs from that on the hologram plane, and the relationship between them depends on the propagation distance and wavelength. Many algorithms have been proposed to address this sampling problem, such as the Fresnel convolution algorithm, the shifted Fresnel algorithm, and the multi-step Fresnel algorithm, but they all increase the computational load.
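A layer-based hologram can be sketched by propagating each depth slice to the hologram plane and summing the results. This minimal version assumes NumPy and swaps in the angular spectrum method for propagation, rather than the single-FFT Fresnel transform: it avoids the paraxial approximation and keeps the same sampling interval in both planes, whereas the single-FFT Fresnel output pitch λz/(NΔx) changes with distance and wavelength (the sampling problem described above). Slice contents, distances, wavelength and pitch are hypothetical; at long distances the plain angular spectrum method can alias, which band-limited variants address and this sketch does not.

```python
import numpy as np


def angular_spectrum(field, distance_m, wavelength_m, pitch_m):
    """Propagate a sampled complex field; the output keeps the input sampling interval."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch_m)
    fy = np.fft.fftfreq(ny, d=pitch_m)
    FX, FY = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength_m ** 2 - FX ** 2 - FY ** 2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * distance_m), 0)  # drop evanescent waves
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def layer_based_cgh(layers, wavelength_m, pitch_m, seed=0):
    """Sum the propagated fields of (amplitude_image, distance_m) depth slices."""
    rng = np.random.default_rng(seed)
    hologram = np.zeros(layers[0][0].shape, dtype=complex)
    for amplitude, z in layers:
        diffuse = amplitude * np.exp(2j * np.pi * rng.random(amplitude.shape))
        hologram += angular_spectrum(diffuse, z, wavelength_m, pitch_m)
    return hologram


def single_fft_fresnel_pitch(wavelength_m, distance_m, n_pixels, input_pitch_m):
    """Output sampling interval of a single-FFT Fresnel transform: lambda*z / (N*dx)."""
    return wavelength_m * distance_m / (n_pixels * input_pitch_m)


if __name__ == "__main__":
    lam, dx, n = 532e-9, 8e-6, 256  # assumed wavelength, pixel pitch, grid size
    near = np.zeros((n, n)); near[100:120, 100:160] = 1.0  # hypothetical slice at 5 cm
    far = np.zeros((n, n)); far[150:200, 60:90] = 1.0      # hypothetical slice at 10 cm
    holo = layer_based_cgh([(near, 0.05), (far, 0.10)], lam, dx)
    print("hologram shape:", holo.shape)
    for z in (0.05, 0.10):
        print(f"single-FFT Fresnel output pitch at z = {z} m:",
              single_fft_fresnel_pitch(lam, z, n, dx))
```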

The stereoscopic perspective method used by Li et al. and Shaked et al. is another way to compute holograms of three-dimensional scenes. Its characteristic feature is that it acquires the scene by multi-view projection. A digital camera or a computer captures projections of the scene from many angles, and an angular offset is added to each of the resulting two-dimensional images to encode its position. The offset images are then fast Fourier transformed, superimposed, and encoded on the hologram plane, yielding the computer-generated hologram of the three-dimensional scene. Obtaining the multi-angle projections is convenient and fast and the computation is relatively simple, so the method suits hologram calculation for virtual objects. However, capturing projections of real objects from many directions usually requires a lens array, which limits the range of projection angles, so the method cannot be used to reconstruct large scenes.
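The steps described above (tilt each view, Fourier transform, superimpose) can be sketched as follows. This is a minimal illustration assuming NumPy, with the angular offset modelled as a linear phase (plane-wave tilt) on each projection image; wavelength, pitch and images are hypothetical, and the final encoding step (for example, keeping only the phase for a phase-only SLM) is left out. The demo checks the key property: the tilt moves each view's spectrum to its own position in the hologram plane.

```python
import numpy as np


def stereo_perspective_cgh(views, wavelength_m, pitch_m):
    """Sum of FFTs of projection images, each tilted by its viewing angle.

    views: list of (2-D real image, theta_x_rad, theta_y_rad).
    """
    ny, nx = views[0][0].shape
    x = (np.arange(nx) - nx // 2) * pitch_m
    y = (np.arange(ny) - ny // 2) * pitch_m
    X, Y = np.meshgrid(x, y)
    hologram = np.zeros((ny, nx), dtype=complex)
    for image, theta_x, theta_y in views:
        # Linear phase = plane-wave tilt that encodes this view's direction.
        tilt = np.exp(2j * np.pi * (np.sin(theta_x) * X + np.sin(theta_y) * Y) / wavelength_m)
        hologram += np.fft.fftshift(np.fft.fft2(image * tilt))
    return hologram


if __name__ == "__main__":
    lam, dx, n = 532e-9, 8e-6, 64      # assumed wavelength, pitch, grid size
    rng = np.random.default_rng(2)
    img = rng.random((n, n))           # stand-in for one rendered projection
    k = 5                              # desired offset in frequency bins
    theta = np.arcsin(k * lam / (n * dx))
    straight = np.abs(stereo_perspective_cgh([(img, 0.0, 0.0)], lam, dx))
    tilted = np.abs(stereo_perspective_cgh([(img, theta, 0.0)], lam, dx))
    print("tilt shifts the view's spectrum by", k, "columns:",
          np.allclose(tilted, np.roll(straight, k, axis=1)))
```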

Space-bandwidth product expansion

In holographic VR/AR systems, image quality is still largely limited by the characteristics of the spatial light modulator. The most prominent problem is that the total number of SLM pixels determines the space-bandwidth product of the display system, which limits the total amount of data the system can present and hence the resolution of the three-dimensional image. To improve display performance, researchers commonly tile multiple spatial light modulators to expand the space-bandwidth product. In 2008, Hahn et al. at Seoul National University used an array of spatial light modulators to expand the space-bandwidth product and widen the viewing angle; in 2013, Lum et al. at Singapore's Data Storage Institute used an 8×3 array of spatial light modulators to increase the total hologram pixel count to 37×10^7. Although SLM tiling effectively increases the data capacity of the display system and yields high-quality dynamic 3D reconstruction, tiled arrays tend to be structurally complex and expensive, so three-dimensional display with SLM arrays still faces cost and technical bottlenecks.

Besides SLM tiling, new materials offer new ways to expand the space-bandwidth product of holographic VR/AR systems. In 2008, Tay et al. of the University of Arizona reported progress in holographic three-dimensional real-time display in Nature, demonstrating a large refreshable holographic display screen based on a photorefractive polymer. In 2013, Smalley et al. reported in Nature a novel spatial light modulator based on anisotropic leaky-mode coupling, which overcomes functional limitations of existing spatial light modulators and provides functions such as polarization selection and diffraction-angle expansion. In 2015, Gu et al. reported in Nature Communications a wide-viewing-angle, full-color three-dimensional display based on graphene materials, which achieves multi-wavelength optical wavefront control at sub-wavelength scale.

In 2016, Wang et al. proposed a metasurface-based holographic display whose core material serves as a phase-modulation medium. When held at a temperature between its glass transition temperature and its melting point, Ge2Sb2Te5 (GST) changes from the amorphous to the crystalline state. Under these conditions, if short, high-energy femtosecond laser pulses irradiate the GST, the irradiated pixels return to the amorphous state. GST in these two states has very different refractive indices, so by changing the refractive index pixel by pixel as required, a complete hologram or three-dimensional light field can be written into the material. Moreover, irradiating the GST with a femtosecond laser of higher energy than the one used for writing completely erases the written information (Figure 16).

When used for holographic three-dimensional display, GST offers advantages unmatched by existing spatial light modulators (SLMs): (1) the light-energy utilization of the modified material can exceed 99%; (2) its pixel pitch can be as small as 0.59 μm, producing a very large diffraction angle and therefore a wider display range. Its shortcomings are also obvious: (1) it demands extremely high precision in fabrication and assembly, and small errors significantly reduce display quality; (2) the refresh rate of GST is still low, so real-time high-resolution three-dimensional display is not yet possible.
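To see why a 0.59 μm pitch matters, apply the grating equation: the finest fringe a pixelated modulator can show has a period of two pixels, so its first diffraction orders leave at sin θ = λ/(2p), giving a full angular range of 2θ. A minimal sketch, assuming 532 nm light and an 8 μm pitch as a representative conventional SLM value (an assumption, not a figure from the text).

```python
import math


def full_diffraction_angle_deg(wavelength_m: float, pitch_m: float) -> float:
    """Full angular range between the +1 and -1 orders for a given pixel pitch."""
    s = wavelength_m / (2 * pitch_m)
    if s > 1:
        raise ValueError("pitch is below half a wavelength")
    return 2 * math.degrees(math.asin(s))


if __name__ == "__main__":
    wavelength = 532e-9  # assumed green illumination
    for label, pitch in (("conventional SLM (assumed 8 um)", 8e-6),
                         ("GST pixel pitch from the text", 0.59e-6)):
        print(f"{label}: {full_diffraction_angle_deg(wavelength, pitch):.1f} deg")
```

Under these assumptions the angular range grows by more than an order of magnitude, which is the "wider display range" claimed above.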

Judging from academic research trends in new nanocomposite materials and optical modulation devices, the future will be an era of revolutionary materials and devices. Volume holographic optics, mentioned above, relies on the Bragg selectivity of volume gratings, which allows information to be multiplexed by wavelength, angle, and polarization. The volume grating itself also carries the phase information of a three-dimensional object, and three-dimensional display can be achieved through holographic reconstruction, so the technology is expected to become the next generation of high-density three-dimensional recording and high-resolution three-dimensional display technology.

Conclusion

This article has analyzed holographic VR/AR from four aspects: the status and development trends of the VR/AR industry, the mainstream display technologies in VR/AR products, the combination of VR/AR with holographic optics, and the challenges facing holographic VR/AR. Considering the development trends of VR/AR products, we draw the following conclusions.

1) VR/AR products are considered the most likely to become the "next-generation computing platform", but they still suffer from high hardware costs, high prices, low content quality, and a small market. As technology advances, prices drop, and content grows richer, the VR/AR industry is bound to develop further.

2) Mainstream VR/AR solutions fall into two types: binocular vision and light field display. Binocular-vision products produce vergence-accommodation conflict, which can cause dizziness and fatigue; light field products also suffer from reduced resolution and vergence-accommodation conflict.

3) Holographic three-dimensional display offers a compact system with no crosstalk, no depth reversal, and no mechanical moving parts, and is considered an ideal VR/AR solution at present. Combining holography with near-eye display, so that only the information within the eye's field of view is displayed, improves optical reconstruction, reduces the computational burden, and improves information utilization.

4) For holographic VR/AR, accelerating the generation of computer-generated holograms and expanding the space-bandwidth product of the display system are the two major current challenges. Better algorithms and revolutionary materials and devices will drive the development of holographic VR/AR.
