Last week, the Apollo team held a Meetup for Apollo 2.5 in Sunnyvale. At the event, Apollo's technology researchers shared the technology behind Apollo 2.5 in depth with students and autonomous driving enthusiasts from Silicon Valley. Developers in China who could not attend in person can review the technical content through the following video materials.

Apollo 2.5 Platform Overview

Jingao Wang

First, Apollo platform leader Jingao Wang gave an overview of the Apollo 2.5 platform to help overseas developers learn more about it.

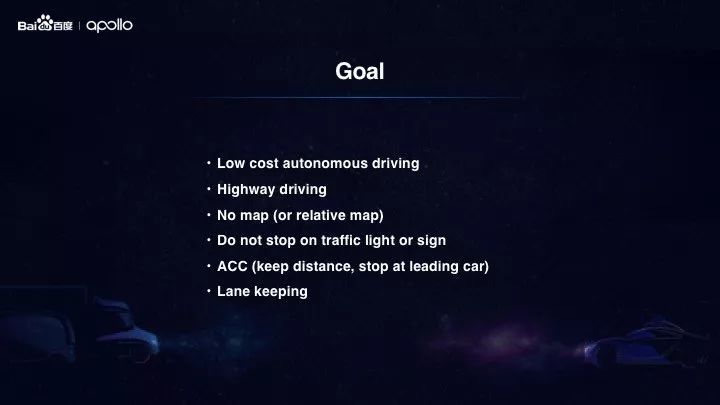

Apollo 2.5 unlocks vision-based highway autonomous driving in limited areas. It offers developers more scenarios, lower cost, and higher performance, and it opens up visual perception, real-time relative maps, high-speed planning and control, and more efficient development tools.

More than 9,000 developers have recommended Apollo on GitHub, and more than 2,000 partners use Apollo. Developers have contributed more than 200,000 lines of code to Apollo, and a growing number have built their own autonomous driving systems with it.

In addition, Apollo can also support a variety of vehicle models and application scenarios including passenger cars, trucks, buses, logistics vehicles, and sweepers.

Vision-Based Perception for Autonomous Driving

Tae Eun

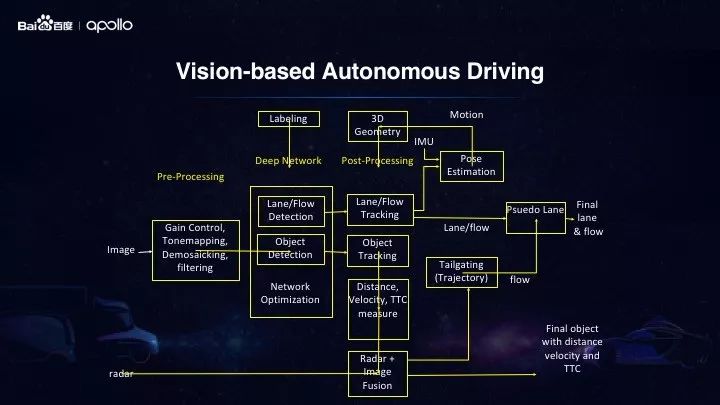

Apollo 2.5 builds its perception on the camera, which simplifies the sensor setup while strengthening perception. At this Meetup, Tae Eun, a senior architect on Apollo's research team, presented a low-cost, camera-based visual perception solution.

Apollo 2.5 offers several sensor configurations that combine wide-angle cameras with LiDAR and millimeter-wave radar. Among them, the low-cost configuration of a monocular wide-angle camera plus millimeter-wave radar cuts cost by 90% compared with the Apollo 2.0 hardware configuration of 64-line LiDAR, wide-angle camera, and millimeter-wave radar. This substantial saving, coupled with efficient perception algorithms, will greatly aid highway driving tests in limited areas.

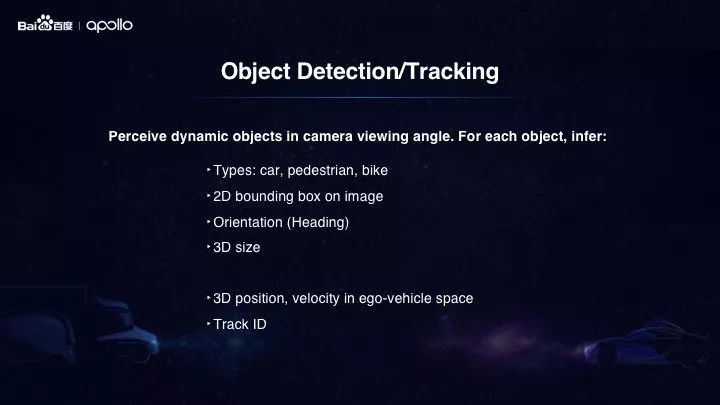

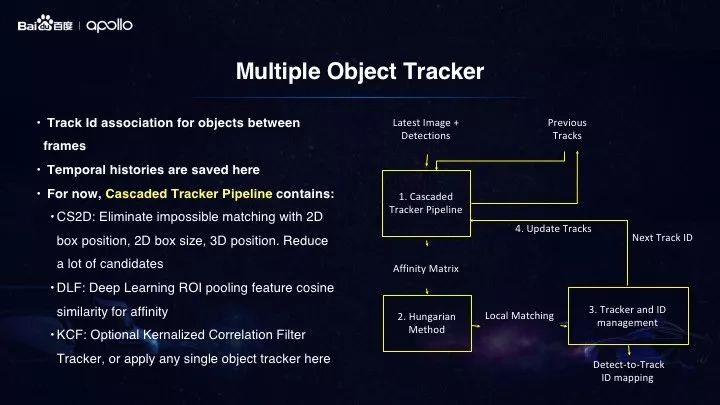

In addition, using fewer sensors does not degrade perception performance. In Apollo 2.5 we use a YOLO-based multitask deep learning neural network to detect and classify obstacles and lane lines.

The perception input is the video captured by the camera, which we can treat as a series of static 2D images, so the key problem is how to compute the 3D properties of obstacles from these images. In our approach, we first use a deep learning neural network to identify obstacles in the 2D image and obtain each obstacle's bounding box and observation angle. We then use the line-segment algorithm, together with the camera ray and the camera calibration, to reconstruct the obstacles in 3D. The entire process can be completed in less than 0.1 milliseconds.
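
To make the idea of a camera ray concrete, here is a minimal sketch in Python. It is not Apollo's line-segment algorithm. It swaps in a simpler, well-known technique: back-projecting the bottom center of a 2D bounding box through a pinhole camera model and intersecting that ray with a flat ground plane. The `Camera` class, the `ground_point_from_bbox` function, the intrinsic values, and the assumptions of a level camera and flat road are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Camera:
    """Hypothetical pinhole calibration: focal lengths and principal point
    in pixels, plus mounting height above the road in metres."""
    fx: float
    fy: float
    cx: float
    cy: float
    height: float


def ground_point_from_bbox(
    cam: Camera, bbox: Tuple[float, float, float, float]
) -> Optional[Tuple[float, float]]:
    """Estimate where an obstacle touches the ground.

    bbox is (left, top, right, bottom) in pixels. Returns (lateral, forward)
    in metres in the camera frame, or None if the ray never hits the ground.
    Assumes a level camera (no pitch or roll) and a flat road.
    """
    left, _top, right, bottom = bbox
    u = (left + right) / 2.0  # bottom-center pixel of the box
    v = bottom

    # Ray direction in the camera frame (x right, y down, z forward),
    # scaled so that the forward component is 1.
    dx = (u - cam.cx) / cam.fx
    dy = (v - cam.cy) / cam.fy

    # A ray at or above the horizon never intersects the ground plane.
    if dy <= 0:
        return None

    # The ground plane sits cam.height below the camera (y = height).
    scale = cam.height / dy
    return (scale * dx, scale)


cam = Camera(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0, height=1.5)
print(ground_point_from_bbox(cam, (600.0, 300.0, 700.0, 460.0)))
```

With these made-up numbers the obstacle lands roughly 15 m ahead and 0.15 m to the right of the camera. A real system would also need to handle camera pitch, lens distortion, and the obstacle's size and heading, which this sketch ignores.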

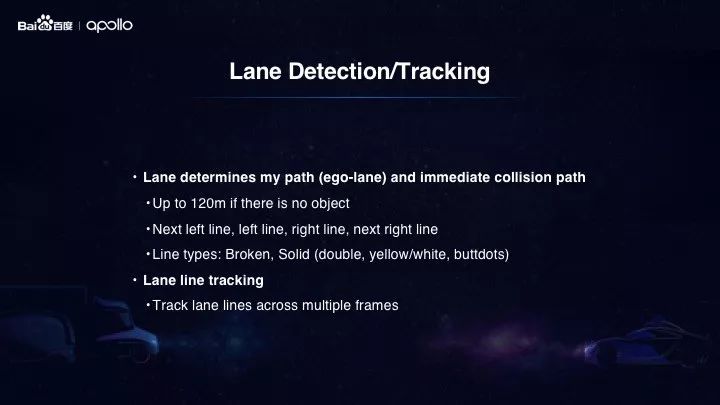

Finally, let's look at how lane lines are processed. First, a deep learning neural network scans each pixel of the 2D image to determine whether it belongs to a lane line, producing a pixel-level lane line mask. Then, using connected-component analysis, we join adjacent lane line pixels into complete lane segments and use polynomial fitting to keep each lane line smooth. Based on the current vehicle position, we derive the semantic meaning of each lane line: whether it is the left, right, or an adjacent lane line. Finally, we convert the lane lines to the vehicle coordinate system and send them to the modules that need them.
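
The post-processing steps described above can be sketched in plain Python. This is an illustrative toy under stated assumptions, not Apollo's implementation: the lane mask is a small hand-written grid standing in for the neural network output, and the function names `connected_components`, `fit_polynomial`, and `label_lanes` are hypothetical. The conversion to the vehicle coordinate system is left out.

```python
from collections import deque


def connected_components(mask):
    """Group lane pixels (value 1) into 8-connected lists of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            seen[r][c] = True
            queue, comp = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            components.append(comp)
    return components


def fit_polynomial(points, degree=2):
    """Least-squares fit of col = sum(coef[k] * row**k).

    Solves the normal equations by Gaussian elimination, so it needs
    at least degree + 1 distinct rows among the points.
    """
    n = degree + 1
    ata = [[sum(r ** (i + j) for r, _ in points) for j in range(n)]
           for i in range(n)]
    atb = [sum(c * r ** i for r, c in points) for i in range(n)]
    for i in range(n):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(i, n), key=lambda k: abs(ata[k][i]))
        ata[i], ata[pivot] = ata[pivot], ata[i]
        atb[i], atb[pivot] = atb[pivot], atb[i]
        for k in range(i + 1, n):
            factor = ata[k][i] / ata[i][i]
            for j in range(i, n):
                ata[k][j] -= factor * ata[i][j]
            atb[k] -= factor * atb[i]
    coef = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(ata[i][j] * coef[j] for j in range(i + 1, n))
        coef[i] = (atb[i] - tail) / ata[i][i]
    return coef


def label_lanes(mask, vehicle_col, degree=2):
    """Fit each component and label it by its position at the bottom row,
    which is the image row closest to the vehicle."""
    bottom = len(mask) - 1
    lanes = []
    for comp in connected_components(mask):
        coef = fit_polynomial(comp, degree)
        col_at_bottom = sum(a * bottom ** k for k, a in enumerate(coef))
        side = "left" if col_at_bottom < vehicle_col else "right"
        lanes.append((side, coef))
    return lanes


# Toy mask: two straight lane lines at columns 1 and 6.
mask = [[1 if c in (1, 6) else 0 for c in range(8)] for _ in range(5)]
for side, coef in label_lanes(mask, vehicle_col=3.5):
    print(side, [round(a, 6) for a in coef])
```

Here the vehicle is assumed to sit at the horizontal center of the image, so a line is labeled by which side of that column it reaches at the bottom row. Distinguishing adjacent lanes from the ego lane lines would need an extra ordering step that this sketch omits.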
